We included 103 studies [10, 26,27,28,29,30,31,32,33,34,35,36,37,38,39,40,41,42,43,44,45,46,47,48,49,50,51,52,53,54,55,56,57,58,59,60,61,62,63,64,65,66,67,68,69,70,71,72,73,74,75,76,77,78,79,80,81,82,83,84,85,86,87,88,89,90,91,92,93,94,95,96,97,98,99,100,101,102,103,104,105,106,107,108,109,110,111,112,113,114,115,116,117,118,119,120,121,122,123,124,125,126,127] evaluating 21 methods (n = 51) [26, 29, 30, 32, 34, 38, 40,41,42,43, 51, 53, 54, 56, 61, 62, 65,66,67, 73, 75, 77, 79, 80, 82, 83, 85,86,87,88,89,90,91,92,93, 100,101,102, 105, 107, 109,110,111,112, 114, 115, 117, 122, 123, 126, 127] and 35 tools (n = 54) [10, 27, 28, 31, 33, 35,36,37, 39, 44,45,46,47,48,49,50, 52, 55, 57,58,59,60, 63, 64, 68,69,70,71,72, 74,75,76, 78, 81, 84, 94,95,96,97,98,99, 103, 104, 106, 108, 109, 113, 116, 118,119,120,121, 125, 128] (Fig. 2: PRISMA study flowchart). Table 3 provides an overview of the identified methods and tools. A total of 73 studies were validity studies (n = 70) [26,27,28,29,30,31,32,33,34,35, 38, 40, 44,45,46,47, 49, 50, 52, 53, 57,58,59, 61, 63,64,65,66,67, 70, 71, 76, 78,79,80, 82,83,84,85,86, 88,89,90,91,92, 95,96,97,98, 100, 102,103,104, 106,107,108,109,110,111,112,113, 115,116,117,118, 120,121,122,123, 125, 126] or usability studies (n = 3) [60, 68, 69] assessing a single method or tool, and 30 studies performed comparative analyses of different methods or tools [10, 36, 37, 39, 41,42,43, 48, 51, 54,55,56, 62, 72,73,74,75, 77, 81, 87, 93, 94, 99, 101, 105, 109, 114, 119, 127, 128]. Few studies prospectively evaluated methods or tools in a real-world workflow (n = 20) [10, 28, 33, 36, 47, 51, 68, 69, 78, 79, 89, 91, 95, 99, 106, 109, 113, 115, 126, 128]; of those, 7 studies used independent testing (by a different reviewer team) with external data [10, 36, 47, 95, 99, 113, 128].

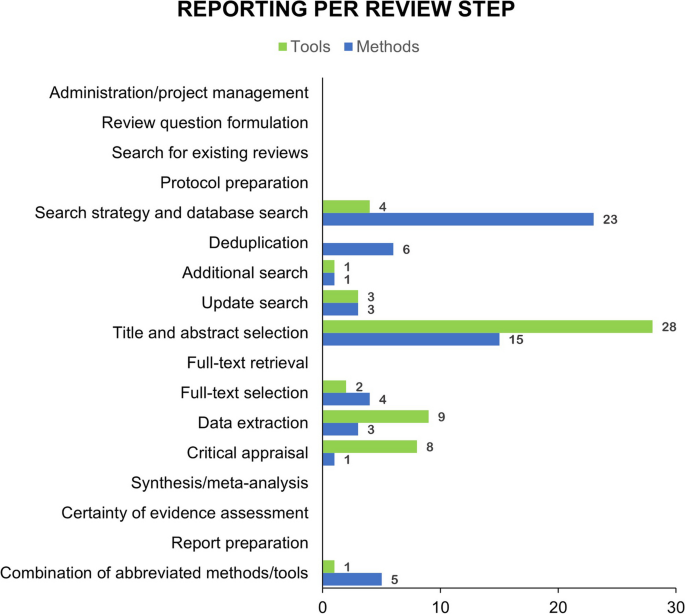

The majority of studies evaluated methods or tools for supporting the tasks of title and abstract screening (n = 42) [33, 36, 37, 39, 44,45,46, 48, 49, 52, 56, 59, 60, 64, 74, 80, 83,84,85, 87,88,89,90,91, 95,96,97, 101, 103, 106,107,108,109, 113,114,115, 118,119,120,121, 126, 128] or devising the search strategy and performing the search (n = 24) [29, 35, 38, 40, 43, 53, 54, 57, 61, 65,66,67, 73, 82, 92,93,94, 99, 100, 105, 111, 122, 123, 127] (see Fig. 3). For several steps of the SR process, only a few studies that evaluated methods or tools were identified: deduplication: n = 6 [31, 37, 55, 58, 72, 81]; additional search: n = 2 [34, 98]; update search: n = 6 [37, 51, 62, 78, 110, 112]; full-text selection: n = 4 [86, 114, 115, 126]; data extraction: n = 11 [32, 37, 47, 68, 70, 71, 75, 104, 113, 125, 126] (one study evaluated both a method and a tool [75]); critical appraisal: n = 9 [27, 28, 37, 50, 63, 69, 76, 102, 116]; and combination of abbreviated methods/tools: n = 6 [10, 26, 77, 79, 101, 117] (see Fig. 3). No studies were found for some steps of the SR process, such as administration/project management, formulating the review question, searching for existing reviews, writing the protocol, full-text retrieval, synthesis/meta-analysis, certainty of evidence assessment, and report preparation. In Appendix 2, we summarize the characteristics of all the included studies.

Most studies reported on validity outcomes (n = 84, 46%) [10, 26,27,28,29,30,31,32,33,34,35, 39, 42,43,44,45,46,47,48,49,50,51,52,53,54,55,56,57,58,59, 62,63,64, 66, 67, 69,70,71,72,73,74,75,76, 78, 80, 81, 83,84,85,86,87,88,89,90,91, 94, 96, 97, 99, 101,102,103,104,105,106,107,108,109,110,111,112,113,114, 116,117,118,119,120,121,122,123,124,125, 127], while outcomes such as workload saving (n = 35, 19%) [10, 28, 29, 32, 33, 39, 44,45,46, 48, 49, 52, 59, 64, 67, 84,85,86,87, 91, 95, 97, 103, 105,106,107,108,109, 111, 114, 115, 117, 119, 121, 127], time-saving (n = 24, 13%) [33,34,35, 39, 45, 47, 48, 51, 52, 70,71,72, 74, 75, 87, 91, 96,97,98,99, 104, 109, 125, 126], impact on results (n = 23, 13%) [26, 32, 38, 40,41,42,43, 59, 61, 62, 65, 77, 79, 82, 92, 93, 100, 101, 111, 115, 117, 122, 127], usability (n = 13, 7%) [36, 37, 39, 60, 68, 69, 78, 94, 95, 97, 98, 121, 125], and cost-saving (n = 3, 2%) [33, 83, 114] were evaluated less often (Fig. 4: Outcomes reported in the included studies). In Appendix 2, we map the efficiency and usability outcomes per tool and method against the review steps of the SR process. The included studies reported various validity outcomes (e.g., specificity, precision, accuracy) and time, cost, or workload savings in undertaking the review. None of the studies reported the personnel effort saved.
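For readers less familiar with the validity outcomes named above, the following minimal sketch shows how sensitivity, specificity, precision, and accuracy are conventionally derived from a 2 × 2 table of screening decisions. The class name `ScreeningCounts`, the function name `validity_metrics`, and the example counts are hypothetical and chosen for illustration only; the included studies may have computed these outcomes differently.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ScreeningCounts:
    """Hypothetical 2 x 2 table of tool decisions against a reference standard."""
    tp: int  # relevant records correctly included
    fp: int  # irrelevant records wrongly included
    fn: int  # relevant records wrongly excluded
    tn: int  # irrelevant records correctly excluded


def validity_metrics(c: ScreeningCounts) -> dict:
    """Return the conventional validity outcomes as proportions (0 to 1)."""
    total = c.tp + c.fp + c.fn + c.tn
    return {
        "sensitivity": c.tp / (c.tp + c.fn),  # also called recall
        "specificity": c.tn / (c.tn + c.fp),
        "precision": c.tp / (c.tp + c.fp),
        "accuracy": (c.tp + c.tn) / total,
    }


# Illustrative counts only, not data from any included study.
counts = ScreeningCounts(tp=95, fp=400, fn=5, tn=1500)
metrics = validity_metrics(counts)
for name, value in metrics.items():
    print(f"{name}: {value:.1%}")
```

The example illustrates why sensitivity and precision are usually read together: a tool can find nearly all relevant records while most of the records it flags are still irrelevant.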

Methods or tools for literature search

Search strategy and database search

Five tools (MeSH on Demand [94, 99], PubReMiner [94, 99], Polyglot Search Translator [35], Risklick search platform [57], and Yale MeSH Analyzer [94, 99]) and three methods (abbreviated search strategies for study type [53, 73, 111], topic [123], or search date [43, 127]; citation-based searching [29, 66, 67]; search restrictions for database [54, 93, 105, 122] and language (e.g. only English articles) [38, 40, 61, 65, 82, 92, 100]) were evaluated in 24 studies [29, 35, 38, 40, 43, 53, 54, 57, 61, 65,66,67, 73, 82, 92,93,94, 99, 100, 105, 111, 122, 123, 127] to support devising search strategies and/or performing literature searches.

Tools for search strategies

Using text mining tools for search strategy development (MeSH on Demand, PubReMiner, and Yale MeSH Analyzer) reduced time expenditure compared to manual searches, saving over half the time required (5 h [standard deviation (SD) = 2] vs. 12 h [SD = 8]) [99]. Searches supported by tools such as Polyglot Search Translator [35], MeSH on Demand, PubReMiner, and Yale MeSH Analyzer [94, 99] were less sensitive [99] and showed slightly reduced precision compared to manual searches (11% to 14% vs. 15%) [94]. The Risklick search platform demonstrated high precision for identifying clinical trials (96%) and COVID-19–related publications (94%) [57].

User ratings by the study authors indicated that PubReMiner and Yale MeSH Analyzer were considered “useful” or “extremely useful,” while MeSH on Demand was rated “not very useful” on a 5-point Likert scale (from extremely useful to least useful) [94].

Abbreviated search strategies for study type, topic, or search date

Two studies evaluated an abbreviated search strategy (i.e., Cochrane highly sensitive search strategy) [53] and a brief RCT strategy [111] for identifying RCTs. Both achieved high sensitivity rates of 99.5% [53] and 94% [111] while reducing the number of records requiring screening by 16% [111]. Although some RCTs were missed using abbreviated search strategies, there were no significant differences in the conclusions [111].

One study [123] assessed an abbreviated search strategy using only the generic drug name to identify drug-related RCTs, achieving high sensitivities in both MEDLINE (99%) and Embase (99.6%) [123].

Lee et al. (2012) evaluated 31 search filters for SRs and meta-analyses, with the health-evidence.ca Systematic Review search filter performing best, maintaining a high sensitivity while reducing the number of articles needing screening (90% in MEDLINE, 88% in Embase, 90% in CINAHL) [73].

Furuya-Kanamori et al. [43] and Xu et al. [127] investigated the impact of restricting search timeframes on effect estimates and found that limiting searches to the most recent 10 to 15 years resulted in minimal changes in effect estimates (< 5%) while reducing workload by up to 45% [43, 127]. Nevertheless, this approach missed 21% to 35% of the relevant studies [43].

Citation-based searching

Three studies [29, 66, 67] assessed whether citation-based searching can improve efficiency in systematic reviewing. Citation-based searching achieved a reduction in the number of retrieved articles (50% to 89% fewer articles) compared to the original searches while still capturing a substantial proportion of 75% to 82% of the included articles [29, 67].

Restricted database searching

Seven studies assessed the validity of restricted database searching and suggested that searching at least two topic-related databases yielded high recall and precision for various types of studies [30, 41, 42, 54, 93, 105, 122].

Preston et al. (2015) demonstrated that searching only MEDLINE and Embase plus reference list checking identified 93% of the relevant references while saving 24% of the workload [105]. Beyer et al. (2013) emphasized the necessity of searching at least two databases along with reference list checking to retrieve all included studies [30]. Goossen et al. (2018) highlighted that combining MEDLINE with CENTRAL and hand searching was the most effective for RCTs (recall: 99%), while for nonrandomized studies, combining MEDLINE with Web of Science yielded the highest recall (99.5%) [54]. Ewald et al. (2022) showed that searching two or more databases (MEDLINE/CENTRAL/Embase) reached a recall of ≥ 87.9% for identifying mainly RCTs [42]. Additionally, Van Enst et al. (2014) indicated that restricting searches to MEDLINE alone might slightly overestimate the results compared to broader database searches in diagnostic accuracy SRs (relative diagnostic odds ratio: 1.04; 95% confidence interval [CI], 0.95 to 1.15) [122]. Nussbaumer-Streit et al. (2018) and Ewald et al. (2020) found that combining one database with another or with searches of reference lists was noninferior to comprehensive searches (2%; 95% CI, 0% to 9%; if opposite conclusion was of concern) [93] as the effect estimates were similar (ratio of odds ratios [ROR], median: 1.0; interquartile range [IQR]: 1.0–1.01) [41].
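For orientation, a ratio of odds ratios compares the pooled odds ratio obtained from a restricted search with the one obtained from a comprehensive search. The notation below is an illustrative assumption and is not taken from the cited studies, which may define the direction of the ratio differently.

```latex
\mathrm{ROR} = \frac{\mathrm{OR}_{\text{restricted}}}{\mathrm{OR}_{\text{comprehensive}}}
```

Under this convention, an ROR of 1.0 means that both search approaches yield the same pooled estimate.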

Restricted language searching

Seven studies found that excluding non-English articles to reduce workload would minimally alter the conclusions or effect estimates of the meta-analyses. Two studies found no change in the overall conclusions [61, 92], and five studies [38, 61, 65, 92, 100] reported changes in the effect estimates or statistical significance of the meta-analyses. Specifically, the statistical significance of the effect estimates changed in 3% to 12% of the meta-analyses [38, 61, 65, 92, 100].

Deduplication

Six studies [31, 37, 55, 58, 72, 81] compared eleven supportive software tools (ASySD, EBSCO, EndNote, Covidence, Deduklick, Mendeley, OVID, Rayyan, RefWorks, Systematic Review Accelerator, Zotero). Manual deduplication took approximately 4 h 45 min, whereas using the tools reduced the time by 4 h 42 min to only 3 min [72]. False negative duplicates varied from 36 (Mendeley) to 258 (EndNote), while false positives ranged from 0 (OVID) to 43 (EBSCO) [72]. The precision was high with 99% to 100% for Deduklick and ASySD, and the sensitivity was highest for Rayyan (ranging from 99 to 100%) [55, 58, 81], followed by Covidence, OVID, Systematic Review Accelerator, Mendeley, EndNote, and Zotero [55, 58, 81]. However, Cowie et al. reported that the Systematic Review Accelerator received a low rating of 9/30 for its features and usability [37].

Additional literature search

Paperfetcher, an application that automates additional searches such as handsearching and citation searching, saved up to 92.0% of the time compared to manual handsearching and reference list checking, though validity outcomes for Paperfetcher were not reported [98]. Additionally, the Scopus approach, in which reviewers electronically downloaded the reference lists of relevant articles and dually screened only new references, saved approximately 62.5% of the time compared to manual checking [34].

Update literature search

We identified one tool (RobotReviewer LIVE) [37, 78] and five methods (Clinical Query search combined with PubMed-related articles search, Clinical Query search in MEDLINE and Embase, searching the McMaster Premium LiteratUre Service [PLUS], PubMed similar articles search, and Scopus citation tracking) [51, 62, 110, 112] for improving the efficiency of updating literature searches. RobotReviewer LIVE showed a precision of 55% and a high recall of 100% [78] with limitations including search restricted to MEDLINE, consideration of only RCTs, and low usability scores for features [37, 78].

The Clinical Query (CQ) search, combined with the PubMed-related articles search and the CQ search in MEDLINE and Embase, exhibited high recall rates ranging from 84 to 91% [62, 110, 112], while the PLUS database had a lower recall rate of 23% [62]. The PubMed similar articles search and Scopus citation tracking had a low sensitivity of 25% each, with time-saving percentages of 24% and 58%, respectively [51]. However, the studies missed by searching only the PLUS database did not significantly change the effect estimates in most reviews (ROR: 0.99; 95% CI, 0.87 to 1.14) [62].

Methods or tools for study selection

Title and abstract selection

We identified 42 studies evaluating 14 supportive software tools (AbstrackR, ASReview, ChatGPT, Colandr, Covidence, DistillerSR, EPPI-reviewer, Rayyan, RCT classifier, Research screener, RobotAnalyst, SRA-Helper for EndNote, SWIFT-active screener, SWIFT-review) [33, 36, 37, 39, 44,45,46, 48, 49, 52, 59, 60, 64, 74, 84, 95,96,97, 103, 106, 108, 109, 113, 118,119,120,121, 128] using advanced text mining and machine and active learning techniques, and five methods (crowdsourcing using different [automation] tools, dual computer monitors, single-reviewer screening, PICO-based title-only screening, limited screening [review of reviews]) [56, 80, 83, 85, 87,88,89,90,91, 101, 107, 109, 114, 115, 126] for improving the title and abstract screening efficiency. The tested datasets ranged from 1 to 60 SRs and 148 to 190,555 records.

Tools for title and abstract selection

Various tools (e.g., EPPI-Reviewer, Covidence, DistillerSR, and Rayyan) offer collaborative online platforms for SRs, enhancing efficiency by managing and distributing screening tasks, facilitating multiuser screening, and tracking records throughout the review process [129].

A semiautomated tool provides suggestions or probabilities regarding the eligibility of a reference for inclusion in the review, but human judgment is still required to make the final decision [7, 10]. In contrast, a fully automated tool makes the final decision without human intervention based on predetermined criteria or algorithms. Some tools provide fully automated screening options (e.g., DistillerSR), semiautomated options (e.g., RobotAnalyst), or both (e.g., AbstrackR, DistillerAI) using machine learning or natural language processing methods [7, 10] (see Table 1).

Among the eleven semi- and fully automated tools (AbstrackR [37, 39, 45, 46, 48, 49, 52, 60, 74, 108, 109, 119], ASReview [84, 96, 103, 121], ChatGPT [113], Colandr [39], DistillerSR [37, 44, 48, 59], EPPI-reviewer [37, 60, 119, 128], Rayyan [36, 37, 39, 74, 95, 97, 120, 128], RCT classifier [118], Research screener [33], RobotAnalyst [36, 37, 48, 106, 109], SRA-helper for EndNote [36], SWIFT-active screener [37, 64], SWIFT-review [74]), ASReview [84, 96, 103, 121] and Research Screener [33] demonstrated a robust performance, identifying 95% of the relevant studies while saving 37% to 92% of the workload. SWIFT-active screener [37, 64], RobotAnalyst [36, 37, 48, 106, 109], and Rayyan [36, 37, 95, 97, 120] also performed well, identifying 95% of the relevant studies with workload savings from 34 to 49%. EPPI-Reviewer identified all the relevant abstracts after screening 40% to 99% of the references across reviews [119]. DistillerAI showed substantial workload savings of 53% while identifying 95% of the relevant studies [59], with varying degrees of validity [44, 48, 59]. Colandr and SWIFT-Review exhibited sensitivity rates of 65% and 91%, respectively, with 97% workload savings and around 400 min of time saved [39, 74]. ChatGPT’s sensitivity was 100% [113] and the RCT classifier’s recall was 99% [118]; workload or time savings were not reported [113, 118]. AbstrackR showed a moderate performance with potential workload savings from 4% up to 97% [39, 45, 46, 48, 49, 52, 108, 109, 119] while missing up to 44% of the relevant studies [39, 46, 49, 52, 109]. Validity outcomes were not reported for Covidence and SRA-Helper for EndNote.
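To make the pairing of "95% of the relevant studies identified" with a workload saving concrete, the sketch below computes one simple version of that quantity: the share of records a reviewer would not need to screen once a prioritized list has surfaced the target proportion of relevant records. This is an assumption for illustration; the included studies used differing definitions of workload saving, and the function name `workload_saving_at_recall` and the example ranking are hypothetical.

```python
import math


def workload_saving_at_recall(ranked_labels, target_recall=0.95):
    """Share of records left unscreened when the target recall is reached.

    ranked_labels: 1 (relevant) or 0 (irrelevant) for each record, in the
    order a prioritization tool would present them to the reviewer.
    """
    if not 0 < target_recall <= 1:
        raise ValueError("target_recall must be in (0, 1]")
    total_relevant = sum(ranked_labels)
    if total_relevant == 0:
        raise ValueError("ranked_labels contains no relevant records")

    # Number of relevant records that must be found to meet the target.
    needed = math.ceil(target_recall * total_relevant)

    found = 0
    for screened, label in enumerate(ranked_labels, start=1):
        found += label
        if found >= needed:
            return 1 - screened / len(ranked_labels)
    raise AssertionError("unreachable: needed never exceeds total_relevant")


# Illustrative ranking only: 100 records, 10 relevant, 9 of them ranked first
# and the last one appearing at position 21.
ranking = [1] * 9 + [0] * 11 + [1] + [0] * 79
saving = workload_saving_at_recall(ranking, target_recall=0.95)
print(f"Workload saving at 95% recall: {saving:.0%}")
```

In this toy ranking, a single late-ranked relevant record determines the stopping point, which suggests one reason why reported savings can vary so widely across datasets.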

Most of the supportive software tools were easy to use or learn, suitable for collaboration, and straightforward for inexperienced users (ASReview, AbstrackR, Covidence, SRA-Helper, Rayyan, RobotAnalyst) [36, 37, 45, 60, 95, 97, 121]. Other tools were more complex to use but were useful for large and complex projects (DistillerSR, EPPI-Reviewer) [37, 48, 60]. Weaknesses reported in the studies were poor interface quality (AbstrackR) [60], issues with the help section or response time (RobotAnalyst, Covidence, EPPI) [36, 60], and an overloaded side panel (Rayyan) [36].

Methods for title and abstract selection

Among the four methods identified (dual computer monitors, single-reviewer screening, crowdsourcing using different [automation] tools, and limited screening [review of reviews, PICO-based title-only screening, title-first screening]) [56, 80, 83, 85, 87,88,89,90,91, 101, 114, 115, 126] for supporting title and abstract screening, crowdsourcing in combination with screening platforms or machine learning tools demonstrated the most promising performance in improving efficiency. Studies by Noel-Storr et al. [83, 88,89,90,91] found that the Cochrane Crowd plus Screen4Me/RCT classifier achieved a high sensitivity ranging from 84 to 100% in abstract screening and reduced screening time. Crowdsourcing via Amazon Mechanical Turk yielded correct inclusions of 95% to 99% with a substantial cost reduction of 82% [83]. However, the sensitivity was moderate when the screening was conducted manually by medical students or on web-based platforms (47% to 67%) [87].

Single-reviewer screening missed 0% to 19% of the relevant studies [56, 101, 114, 115] while saving 50% to 58% of the time and costs, respectively [114]. The findings indicate that single-reviewer screening by less-experienced reviewers could substantially alter the results, whereas experienced reviewers had a negligible impact [101].

Limited screening methods, such as reviews of reviews (also known as umbrella reviews), exhibited a moderate sensitivity (56%) and significantly reduced the number of citations needed to screen [109]. Title-first screening and PICO-based title screening demonstrated high validity, with a recall of 100% [80, 108] and a reduction in screening effort ranging from 11 to 78% [108]. However, screening with dual computer monitors did not notably improve the time saved [126].

Full-text selection

For full-text screening, we identified three methods: crowdsourcing [86], using dual computer monitors [126], and single-reviewer screening [114, 115]. Using crowdsourcing in combination with CrowdScreenSR saved 68% of the workload [86]. With dual computer monitors, no significant difference in the time taken for full-text screening was reported [126]. Single-reviewer screening missed 7% to 12% of the relevant studies [115] while saving only 4% of the time and costs [114].

Methods or tools for data extraction

We identified 11 studies evaluating five tools (ChatGPT [113], Data Abstraction Assistant (DAA) [37, 68, 75], Dextr [125], ExaCT [47, 71, 104] and Plot Digitizer [70]) and two methods (dual computer monitors [126] and single data extraction [32, 75]) to expedite the data extraction process.

ExaCT [47, 71, 104], DAA [37, 68, 75], Dextr [125], and Plot Digitizer [70] achieved a time reduction of up to 60% [104, 125], with precision rates of 93% for ExaCT [71, 104] and 96% for Dextr and an error rate of 17% for DAA [68, 75]. Manual extraction by two reviewers and with the assistance of Plot Digitizer showed a similar agreement with the original data, showing a slightly higher agreement with the assistance of Plot Digitizer (Plot Digitizer: 73% and 75%, manual extraction: 66% and 69%) [70]. A total of 87% of manually extracted data elements matched with ExaCT, resulting in qualitatively altered meta-analysis results [104]. ChatGPT demonstrated consistent agreement with human researchers across various parameters (κ = 0.79–1) extracted from studies, such as language, targeted disease, natural language processing model, sample size, and performance parameters, and moderate to fair agreement for clinical task (κ = 0.58) and clinical implementation (κ = 0.34) [113]. Usability was assessed only for DAA and Dextr, with both tools deemed very easy to use [68, 125], although DAA scored lower on feature scores, while Dextr was noted for its flexible interface [125].
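The κ values above express agreement beyond what would be expected by chance. The following minimal sketch computes Cohen's kappa for two raters on categorical items; it assumes the reported κ is Cohen's kappa, which the text does not state, and the function name `cohens_kappa` and the example labels are hypothetical.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters labelling the same items."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("both raters must label the same non-empty item set")
    n = len(rater_a)

    # Observed agreement: share of items with identical labels.
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n

    # Chance agreement: product of each rater's marginal label frequencies.
    freq_a = Counter(rater_a)
    freq_b = Counter(rater_b)
    expected = sum(freq_a[label] * freq_b[label] for label in freq_a) / (n * n)

    if expected == 1:
        raise ValueError("kappa is undefined when chance agreement equals 1")
    return (observed - expected) / (1 - expected)


# Illustrative labels only, not data from the cited study.
human = ["yes", "yes", "no", "no", "yes", "no", "yes", "no", "yes", "no"]
tool = ["yes", "yes", "no", "no", "yes", "no", "yes", "no", "yes", "yes"]
kappa = cohens_kappa(human, tool)
print(f"kappa = {kappa:.2f}")
```

Here the raters agree on 9 of 10 items, but because half of that agreement would be expected by chance, κ is lower than the raw agreement of 90%.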

Single data extraction and dual monitors reduced the time for extracting data by 24 to 65 min per article [32, 75, 126], with similar error rates between single and dual data extraction methods (single: 16% [75], 18% [32], dual: 15% [32, 75]) and comparable pooled estimates [32, 75].

Methods or tools for critical appraisal

We identified nine studies reporting on one software tool (RobotReviewer) [27, 28, 37, 50, 63, 69, 76, 116] and one method (crowdsourcing via CrowdCARE) [102] for improving critical appraisal efficiency. Collectively, the study authors suggested that RobotReviewer can support but not replace RoB assessments by humans [27, 28, 50, 63, 69, 76] as performance varied per RoB domain [27, 50, 63, 116]. The authors reported similar completion times for RoB appraisal with and without RobotReviewer assistance [28]. Reviewers were as likely to accept RobotReviewer’s judgments as one another’s during consensus (83% for RobotReviewer, 81% for humans) [69], showing similar accuracy (RobotReviewer-assisted RoB appraisal: 71%, RoB appraisal by two reviewers: 78%) [28, 76]. The reviewers generally described the tool as acceptable and useful [69], although it does not allow collaboration with other users [37].

Combination of abbreviated methods/tools

Five studies evaluated RR methods [26, 77, 79, 101, 117], and one study evaluated various tools [10] combining multiple review steps. While two case studies found no differences in findings between RR and SR approaches [79, 117], another study found that in two of three RRs, no conclusion could be drawn due to insufficient information [26]. Additionally, in a study including three RRs, RR methods affected almost one-third of the meta-analyses with less precise pooled estimates [101]. Marshall et al. (2019) included 2,512 SRs and reported a loss of all data in 4% to 45% of the meta-analyses and changes of 7% to 39% in the statistical significance due to RR methods [77]. Automation tools (SRA-Deduplicator, EndNote, Polyglot Search Translator, RobotReviewer, SRA-Helper) reduced the person-time spent on SR tasks (42 h versus 12 h) [10]. However, error rates, falsely excluded studies, and sensitivity varied immensely across studies [26, 117].
