Search results

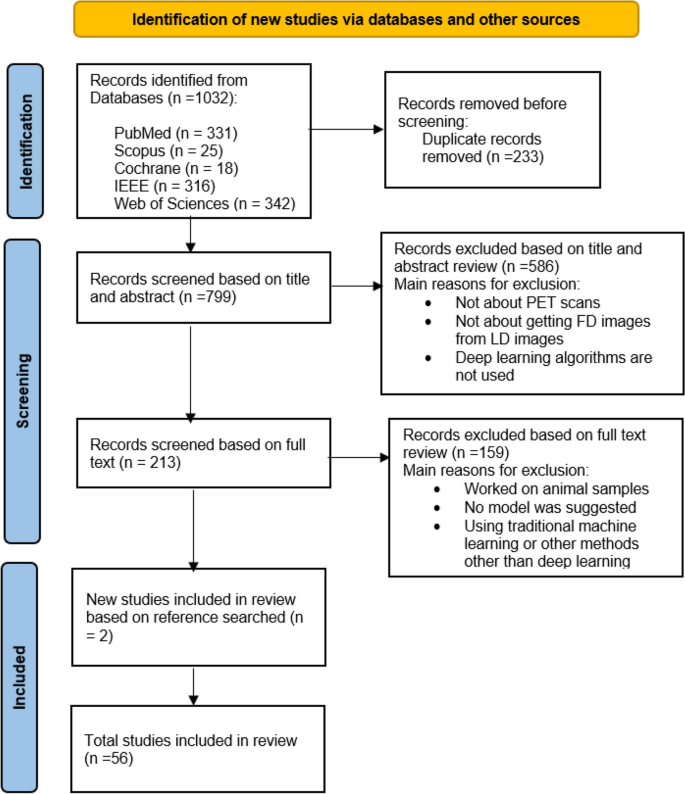

The initial systematic search identified 1032 studies. After duplicates were removed, 799 articles were retained for title and abstract screening, and 213 articles were selected for full-text evaluation. Of these, 159 articles were excluded because they did not propose an architecture, only compared existing architectures, or used animal datasets. Finally, 56 articles published between 2017 and 2023 were included in this systematic review, two of which were identified by snowballing from the reference lists of included articles. All included studies aimed to demonstrate the potential of deep learning algorithms for LD to FD PET conversion. The flowchart of study selection is shown in Fig. 1.

Quality assessment

Table 2 presents a summary of the quality assessment for the included studies using the CLAIM tool.

Dataset characteristics

Both prospective and retrospective studies were included. Studies [16, 26,27,28,29, 37, 40, 41, 64, 66] were retrospective, while the others were prospective. Dataset sizes varied widely: the study by Kaplan et al. had the smallest dataset, with only 2 patients, and most studies analyzed fewer than 40 patients. The largest datasets were those of studies [7, 66] and [54], which included 311 and 587 samples, respectively (see Table 3 for details; additional information is available in the Supplementary material).

Datasets consisted of either real-world or simulated data, as in [30, 53, 60], and included healthy subjects, patients, or both, across different body regions. Patients were scanned in the brain [12, 13, 15, 17, 18, 20, 21, 26,27,28,29, 31, 39,40,41, 46, 52, 53, 55, 60, 64, 65, 68], lung [19, 21, 25, 34], bowel [44], thorax [34, 45], breast [50], neck [57], and abdomen [63] regions, and twenty-two studies used whole-body images [14, 16, 22, 23, 32, 33, 35,36,37,38, 42, 43, 48, 49, 51, 54, 58, 59, 61, 62, 66, 67]. PET data were acquired with various scanners and different radiopharmaceuticals, such as 18F-FDG, 18F-florbetaben, 68Ga-PSMA, 18F-FE-PE2I, 11C-PiB, 18F-AV45, 18F-ACBC, 18F-DCFPyL, or other amyloid radiopharmaceuticals. These radiopharmaceuticals were injected at doses ranging from 0.2% up to 50% of the full dose, and the resulting LD images were used to estimate FD PET images.
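Reduced-dose data can also be emulated from full-dose count data. The minimal sketch below shows one common technique, binomial thinning of Poisson counts; it is an illustrative assumption, not necessarily the procedure used in [30, 53, 60] or any other reviewed study, and the function name `thin_counts` is hypothetical.

```python
import numpy as np


def thin_counts(fd_counts: np.ndarray, dose_fraction: float, seed: int = 0) -> np.ndarray:
    """Emulate a reduced-dose acquisition by binomial thinning of integer counts.

    Each recorded count in `fd_counts` (e.g. a sinogram bin) is kept
    independently with probability `dose_fraction`. If the full-dose counts
    are Poisson with mean lam, the thinned counts are Poisson with mean
    dose_fraction * lam, which mimics a proportionally lower injected dose.
    """
    if not 0.0 <= dose_fraction <= 1.0:
        raise ValueError("dose_fraction must lie in [0, 1]")
    rng = np.random.default_rng(seed)
    return rng.binomial(fd_counts.astype(np.int64), dose_fraction)


# Hypothetical usage: a 10% dose from simulated full-dose sinogram counts.
full_dose = np.random.default_rng(1).poisson(100.0, size=(64, 64))
low_dose = thin_counts(full_dose, dose_fraction=0.1)
```

Because every count is kept or dropped independently, the thinned data retain realistic Poisson noise rather than simply being a scaled-down copy of the full-dose image.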

In these studies, the data were pre-processed to prepare them as model input, and some articles used data augmentation to compensate for the limited number of samples [13, 18, 19, 47].
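The augmentation schemes of [13, 18, 19, 47] are not detailed here; random flips and 90-degree rotations are assumed below purely as a common example. The key point the sketch illustrates is that an LD input and its FD target must receive the same geometric transform. The helper name `augment_pair` is hypothetical.

```python
import numpy as np


def augment_pair(ld: np.ndarray, fd: np.ndarray, rng: np.random.Generator):
    """Apply the same random 90-degree rotation and flip to an LD/FD slice pair.

    Sharing the transform keeps the voxel-wise correspondence between input
    and target that the network learns from.
    """
    if ld.shape != fd.shape:
        raise ValueError("LD and FD slices must have the same shape")
    k = int(rng.integers(0, 4))      # number of 90-degree rotations
    flip = bool(rng.integers(0, 2))  # whether to mirror left-right
    out = []
    for img in (ld, fd):
        img = np.rot90(img, k)
        if flip:
            img = np.fliplr(img)
        out.append(np.ascontiguousarray(img))
    return out[0], out[1]
```

Intensity-altering augmentations would need more care, since they can change the dose-dependent noise statistics the model is meant to learn.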

Design

To synthesize high-quality PET images with deep learning, a model must be trained to learn a mapping from LD to FD PET images.

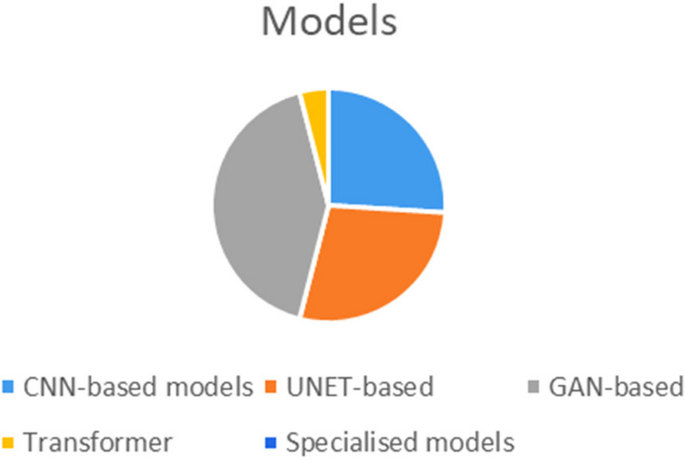

Several models based on convolutional neural networks (CNNs), UNET, and generative adversarial networks (GANs) have been proposed, with GANs being the most widely adopted among them.

After a systematic literature review of medical image reconstruction and synthesis studies, this review included thirteen CNN-based models ([12,13,14, 21, 27, 31, 36, 42, 43, 51, 56, 58, 67]), fifteen UNET-based models ([17, 19, 22, 26, 28, 37, 39, 40, 44, 45, 48, 55, 61, 62, 66]), twenty-one GAN-based models ([15, 16, 18, 20, 23,24,25, 29, 32, 33, 35, 38, 41, 46, 47, 49, 50, 52,53,54, 65]), two transformer models ([60, 69]), and several other specialized models ([48, 57, 59,60,61, 63, 68]) for discussion and comparison. The frequency of the models employed in the reviewed studies is shown in Fig. 2.

To the best of our knowledge, Xiang et al. [12] were among the first to propose a CNN-based method for FD PET image estimation, called auto-context CNN, in 2017. This approach combines multiple CNN modules following an auto-context strategy to iteratively refine the results. In 2019, Kaplan et al. [14] developed a residual CNN that integrated specific image features into the loss function to preserve edge, structural, and textural details, successfully removing noise from PET images acquired with 1/10th of the full dose.

Gong et al. [21] trained a CNN on simulated data and fine-tuned it with real data to remove noise from brain and lung PET images. In subsequent research, Wang et al. [36] conducted a similar study aimed at improving the quality of whole-body PET scans, using a CNN together with the corresponding MR images. Spuhler et al. [27] employed a CNN variant with dilated kernels in each convolution to improve feature extraction. Mehranian et al. [30] proposed a forward-backward splitting algorithm for the Poisson likelihood and unrolled it into a recurrent neural network composed of several CNN-based blocks.
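Dilated kernels help feature extraction mainly by enlarging the receptive field without adding parameters. The short sketch below computes the receptive field of a stack of stride-1 convolutions; the layer counts and dilation rates in the usage lines are hypothetical and are not those of Spuhler et al. [27].

```python
def receptive_field(kernel_sizes, dilations):
    """Receptive field (in pixels) of a stack of stride-1 convolutions.

    A layer with kernel size k and dilation d widens the field by (k - 1) * d.
    """
    if len(kernel_sizes) != len(dilations):
        raise ValueError("one dilation per layer is required")
    rf = 1
    for k, d in zip(kernel_sizes, dilations):
        rf += (k - 1) * d
    return rf


print(receptive_field([3, 3, 3], [1, 1, 1]))  # 7: ordinary 3x3 convolutions
print(receptive_field([3, 3, 3], [1, 2, 4]))  # 15: same weights, dilated
```

With the same number of 3x3 weights, doubling the dilation per layer roughly doubles the spatial context each output pixel sees.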

Research has also demonstrated the strength of CNNs with a UNET structure for producing high-fidelity PET images. Xu et al. [13] showed in 2017 that a UNET can accurately learn the difference between the LD PET image and the reference FD PET image when only 1/200th of the standard dose is injected. Notably, the UNET skip connections were used specifically to help the network learn image details. Chen et al. (2019) [17] proposed combining LD PET and multiple MRI images as conditional inputs to a UNET architecture to produce high-quality, accurate PET images. Cui et al. [22] proposed an unsupervised deep learning method based on a UNET structure for PET image denoising, in which the patient's MR prior image is used as the network input and the noisy PET image as the training label. Their method requires neither high-quality images as training labels nor prior training or large datasets. Lu et al. [19] demonstrated that training a 3D UNET on just 8 LD images of lung cancer patients, generated from 10% of the corresponding FD images, yielded significant noise reduction and reduced bias in the detection of lung nodules. Sanaat et al. (2020) [26] introduced a slightly different approach, showing that using a UNET to learn a mapping from the LD PET sinogram to the FD PET sinogram can improve the reconstructed FD PET images. Using a UNET structure, Liu et al. [37] reduced the noise in clinical PET images of very obese patients to the noise level of lean patients. The model proposed by Sudarshan et al. [40] uses a UNET whose loss function incorporates the physics of the PET imaging system and the heteroscedasticity of the residuals, improving robustness to out-of-distribution data. In contrast to previous research that focused on specific body regions, Zhang et al. [62] proposed a comprehensive framework for hierarchically reconstructing total-body FD PET images that addresses the diverse shapes and intensity distributions of different body parts. It employs a deep cascaded U-Net as the global total-body network, followed by four local networks that refine the reconstruction of specific regions: head-neck, thorax, abdomen-pelvis, and legs.
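The residual formulation used by Xu et al. [13], in which the network predicts the difference between LD and FD images rather than the FD image itself, can be illustrated without a deep network. In the sketch below a linear 3x3 filter fitted by least squares stands in for the UNET; this substitution and all function names are hypothetical and only show how the residual target and the final estimate are formed.

```python
import numpy as np


def neighborhood_features(img: np.ndarray) -> np.ndarray:
    """Stack each pixel's 3x3 neighborhood (edge-padded) plus a bias term."""
    padded = np.pad(img, 1, mode="edge")
    h, w = img.shape
    cols = [padded[dy:dy + h, dx:dx + w].ravel()
            for dy in range(3) for dx in range(3)]
    cols.append(np.ones(h * w))
    return np.stack(cols, axis=1)  # shape (h * w, 10)


def fit_residual_filter(ld: np.ndarray, fd: np.ndarray) -> np.ndarray:
    """Least-squares fit of a linear 3x3 filter to the residual target FD - LD."""
    x = neighborhood_features(ld)
    r = (fd - ld).ravel()
    weights, *_ = np.linalg.lstsq(x, r, rcond=None)
    return weights


def predict_fd(ld: np.ndarray, weights: np.ndarray) -> np.ndarray:
    """Residual formulation: FD estimate = LD input + predicted correction."""
    correction = neighborhood_features(ld) @ weights
    return ld + correction.reshape(ld.shape)
```

Because a zero correction reproduces the LD input, the model only has to learn the noise and bias to remove, which is the usual motivation for residual learning in denoising.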

On the other hand, more researchers have designed GAN-like networks for FD PET image estimation. GANs have a more complex structure and, through their adversarial (structural) loss, can mitigate some problems attributed to CNNs, such as blurry outputs. For example, Wang et al. [15] (2018) developed a comprehensive framework based on 3D conditional GANs, adding skip connections to the original UNET network. Their subsequent study in 2019 [20] focused on multimodal GANs and locally adaptive fusion techniques to combine multimodal image information more effectively. Unlike two-dimensional (2D) models, the 3D convolutions in their framework prevent discontinuous cross-like artifacts. Ouyang et al. (2019) [18] showed that a GAN architecture with a pretrained amyloid status classifier and feature matching in the discriminator can produce comparable results even without MR information. Gong et al. implemented PT-WGAN [23], which uses a Wasserstein generative adversarial network (WGAN) to denoise LD PET images. PT-WGAN uses a parameter transfer strategy that initializes the network with the parameters of a pre-trained WGAN, allowing it to build on the pre-trained model and improve its denoising of LD PET images. In similar work, Hu et al. [35] used a Wasserstein GAN to predict the FD PET image directly from LD PET sinogram data. Xue et al. developed a deep learning method based on a conditional GAN to recover high-quality images from LD PET scans; the model was trained on 18F-FDG images from one scanner and tested on different scanners and tracers. Zhou et al. [49] proposed a segmentation-guided style-based GAN for PET synthesis, which uses 3D segmentation to guide the GAN toward accurate and realistic PET images, while the style-based techniques improve the quality and consistency of the synthesized images. Fujioka et al. [50] applied the pix2pix GAN to improve the image quality of low-count dedicated breast PET images. This was the first study to use pix2pix GAN for dedicated breast PET image synthesis, a challenging task because of the high noise and low resolution of dedicated breast PET images. Similarly, Hosch et al. [54] used an image-to-image translation framework to generate synthetic FD PET images from ultra-low-count PET and CT inputs, employing group convolution in the first layer to process the two inputs separately. Fu et al. [65] introduced a GAN architecture called AIGAN, designed for efficient and accurate reconstruction of both low-dose CT and LD PET images. AIGAN combines three modules: a cascade generator, a dual-scale discriminator, and a multi-scale spatial fusion module. It enhances the images in stages, first making coarse improvements and then refining them with attention-based techniques.
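The Wasserstein objective behind PT-WGAN [23] and the work of Hu et al. [35] can be summarized by two losses computed from critic (discriminator) scores. The sketch below shows the standard WGAN formulation on precomputed scores; it omits the Lipschitz constraint (weight clipping in the original WGAN, or a gradient penalty in WGAN-GP), and which variant the reviewed studies used is not stated here.

```python
import numpy as np


def wgan_critic_loss(critic_real: np.ndarray, critic_fake: np.ndarray) -> float:
    """Critic loss. Minimizing it maximizes mean D(real) - mean D(fake),
    which estimates the Wasserstein-1 distance for a 1-Lipschitz critic."""
    return float(np.mean(critic_fake) - np.mean(critic_real))


def wgan_generator_loss(critic_fake: np.ndarray) -> float:
    """Generator loss: raise the critic's scores on generated FD images."""
    return float(-np.mean(critic_fake))
```

Unlike the cross-entropy GAN loss, these scores are unbounded, which gives the generator useful gradients even when the critic separates real and generated images well; this is one reason WGANs are often reported to train more stably.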

Recently, several articles have highlighted the Cycle-GAN model, a variant of the GAN framework. Lei et al. [16] used a Cycle-GAN model to accurately predict FD whole-body 18F-FDG PET images from LD inputs acquired with only 1/8th of the full dose. In a 2020 study [32], they used a similar approach, incorporating CT images into the network to aid PET image synthesis from LD data on a small dataset of 16 patients. Also in 2020, Zhou et al. [25] proposed a supervised deep learning model based on Cycle-GAN for PET denoising. Ghafari et al. [47] introduced a Cycle-GAN model to generate standard scan-duration PET images from short scan-duration inputs. The authors evaluated model performance across different radiotracers, scan durations, and body mass indices, and reported that the optimal scan duration depends on the trade-off between image quality and scan efficiency.
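A supervised Cycle-GAN such as that of Zhou et al. [25] trains two generators, G (LD to FD) and F (FD to LD), and can add a paired supervised term to the usual adversarial, cycle-consistency, and identity terms. The sketch below assembles such a generator objective from arbitrary callables; the least-squares adversarial form, the L1 distances, and the weights `lam_cyc`, `lam_id`, and `lam_sup` are assumptions for illustration, not values reported by the reviewed studies.

```python
import numpy as np


def l1(a, b):
    return float(np.mean(np.abs(a - b)))


def cycle_gan_generator_loss(ld, fd, G, F, D_fd, D_ld,
                             lam_cyc=10.0, lam_id=5.0, lam_sup=1.0):
    """Supervised Cycle-GAN generator objective for paired LD/FD images.

    G maps LD -> FD and F maps FD -> LD; D_fd and D_ld return discriminator
    scores where 1 means "real". Adversarial terms use the least-squares form.
    """
    fake_fd, fake_ld = G(ld), F(fd)
    adversarial = float(np.mean((D_fd(fake_fd) - 1.0) ** 2)
                        + np.mean((D_ld(fake_ld) - 1.0) ** 2))
    cycle = l1(F(fake_fd), ld) + l1(G(fake_ld), fd)   # LD -> FD -> LD, FD -> LD -> FD
    identity = l1(G(fd), fd) + l1(F(ld), ld)          # a generator should not alter its target domain
    supervised = l1(fake_fd, fd) + l1(fake_ld, ld)    # uses the LD/FD pairing directly
    return adversarial + lam_cyc * cycle + lam_id * identity + lam_sup * supervised
```

Without the supervised term the objective reduces to the unpaired Cycle-GAN loss, which is why this term is what distinguishes the supervised variants.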

Among other architectures, Zhou et al. [61] proposed a federated transfer learning (FTL) framework for LD PET denoising using heterogeneous LD data. The authors reported that their UNET-based method can efficiently exploit heterogeneous LD data without compromising data privacy, achieving better LD PET denoising performance than previous federated learning (FL) methods for institutions with different LD settings. In a different work, Yie et al. [29] applied Noise2Noise, a self-supervised method, to remove PET noise. Feng et al. (2020) [31] used a CNN for PET sinogram denoising and a GAN for reconstruction.
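Federated learning preserves privacy because institutions exchange model parameters rather than patient images. The sketch below shows generic FedAvg-style aggregation, weighting each institution by its number of training samples; it is not the FTL framework of Zhou et al. [61], whose aggregation details are not given here, and `federated_average` is a hypothetical name.

```python
import numpy as np


def federated_average(client_weights, client_sizes):
    """FedAvg-style aggregation of per-institution model parameters.

    client_weights: one list of parameter arrays per institution, all with
    the same structure; client_sizes: number of local training samples.
    """
    total = float(sum(client_sizes))
    if total <= 0:
        raise ValueError("client_sizes must sum to a positive number")
    n_params = len(client_weights[0])
    return [
        sum(w[i] * (n / total) for w, n in zip(client_weights, client_sizes))
        for i in range(n_params)
    ]
```

In each round, the averaged parameters are sent back to the institutions for further local training; transfer-learning variants may additionally fine-tune parts of the model locally to handle different LD settings.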

The chosen architecture can be trained with different configurations and input data types. Below, we review the input types used in the extracted articles: 2D, 2.5D, 3D, multi-channel, and multi-modality. Several studies used 2D inputs (single slices or patches) [12, 14, 17, 21, 24, 25, 27, 29, 47, 50, 52, 53, 56, 57, 67, 68]; in these studies, researchers extracted slices or patches from 3D images and treated them separately when training the model. 2.5D (multi-slice) models stack adjacent slices to incorporate morphological information [13, 18, 36, 40, 42,43,44, 46, 48, 54, 61, 65]. Training on 2.5D multi-slice inputs differs from using a 3D convolutional network mainly in how the data are represented: a 2.5D model feeds the stacked slices to a 2D network as channels, whereas the 3D approach also convolves along the depth axis and takes the whole volume as input. Sixteen studies investigated a 3D training approach [15, 16, 19, 20, 22, 23, 32, 34, 37, 41, 45, 49, 51, 55, 62, 66].

Multi-channel input refers to input data with multiple channels, each representing a different aspect of the input. By processing each channel separately before combining them, the network can learn to capture information from each channel that is relevant to the task. Studies [12, 68] used multi-channel inputs, enabling the network to learn more complex relationships between data features. In addition, some researchers used multi-modality data to provide more complete information to their models. For instance, combining anatomical information from CT [32, 45, 54, 62, 66] and MRI [12, 17, 20, 36, 40, 42, 52, 56, 57] scans with functional information from PET images can improve image quality.
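The difference between 2D, 2.5D, and 3D inputs is easiest to see in the array shapes a network receives. The sketch below prepares each input type from a PET volume of shape (depth, height, width); the function names, the channel-first layout, and edge-slice repetition in the 2.5D case are assumptions for illustration.

```python
import numpy as np


def slices_2d(volume):
    """2D: every axial slice becomes an independent (1, H, W) sample."""
    return [volume[z][np.newaxis] for z in range(volume.shape[0])]


def stacks_25d(volume, half_width=1):
    """2.5D: each slice plus its neighbors, stacked as channels (2k+1, H, W).

    Edge slices are handled by repeating the first/last slice.
    """
    padded = np.pad(volume, ((half_width, half_width), (0, 0), (0, 0)), mode="edge")
    depth = 2 * half_width + 1
    return [padded[z:z + depth] for z in range(volume.shape[0])]


def volume_3d(volume):
    """3D: the whole volume as one (1, D, H, W) sample; depth stays a
    spatial axis that 3D convolutions slide along."""
    return volume[np.newaxis]
```

In the 2.5D case neighboring slices only enter as channels of the first layer, whereas a 3D network keeps depth as a spatial axis through every layer, at a higher memory cost.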

The choice of loss function is another critical setting in deep neural networks because it directly affects the performance and quality of the model. Different loss functions prioritize different aspects of the predictions, such as accuracy, smoothness, or sparsity. Among the reviewed articles, the mean squared error (MSE) loss was among the most frequently chosen [12, 26, 29, 32, 44, 60, 62, 66, 67], while L1 and L2 losses were used in [13, 15, 17, 20, 27, 39, 48, 57, 58] and [19, 22, 37, 61, 68], respectively. MSE (a variant of L2) emphasizes larger errors, whereas the mean absolute error (MAE) loss (a variant of L1) measures the average magnitude of errors by averaging the absolute differences between corresponding pixel values [55]. The Huber loss combines the benefits of MSE and MAE: it is quadratic for small errors and linear for large ones [45].
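The three pixel-wise losses compared above can be written in a few lines. This sketch uses the standard definitions; the Huber threshold `delta` is a free parameter, and the default of 1.0 is an assumption rather than a value from [45].

```python
import numpy as np


def mse(pred, target):
    """Mean squared error: squaring amplifies large errors."""
    return float(np.mean((pred - target) ** 2))


def mae(pred, target):
    """Mean absolute error: every unit of error counts equally."""
    return float(np.mean(np.abs(pred - target)))


def huber(pred, target, delta=1.0):
    """Quadratic for |error| <= delta, linear beyond it."""
    err = np.abs(pred - target)
    quadratic = 0.5 * err ** 2
    linear = delta * (err - 0.5 * delta)
    return float(np.mean(np.where(err <= delta, quadratic, linear)))
```

For errors of 0.5, 1, and 3, the single large error contributes 9 of the 10.25 total squared error under MSE, but only 2.5 of 3.125 under Huber, which is why Huber is less dominated by outliers such as hot spots or artifacts.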

The blurry network outputs produced with the MSE loss led to the adoption of perceptual loss as a training loss [21, 31, 56]. This loss is based on features extracted from a pretrained network and can preserve image details better than the pixel-based MSE loss. Combining MSE with gradient and total-variation terms in the loss function was another approach used to preserve edges, structural details, and natural texture [14]. Adversarial learning can produce hallucinated structures, suffer from training instability, and synthesize images of high visual quality that do not agree with clinical interpretation. To address these problems, combined optimization objectives have been used, including a pixel-wise L1 loss, a structured adversarial loss [49, 50], and a task-specific perceptual loss that makes the generated images closely match the expected feature values on the intermediate layers of the discriminator [18]. In supervised Cycle-GANs, where LD and FD PET images are paired, four types of losses were employed: adversarial loss, cycle-consistency loss, identity loss, and supervised learning loss [24, 25, 47]. Different combinations of loss functions can be used for different purposes to guide the model toward generating images or volumes similar to the ground truth data [32, 35, 40, 41, 46, 52, 53, 56, 65].
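One plausible way to combine MSE with gradient and total-variation terms, in the spirit of [14], is sketched below. The exact formulation and weights used by Kaplan et al. are not given here, so the gradient-matching term, the TV penalty on the prediction, and the weights `w_grad` and `w_tv` are all assumptions.

```python
import numpy as np


def image_gradients(img):
    """Forward finite differences along rows and columns."""
    return np.diff(img, axis=0), np.diff(img, axis=1)


def total_variation(img):
    """Anisotropic total variation: sum of absolute finite differences."""
    gy, gx = image_gradients(img)
    return float(np.abs(gy).sum() + np.abs(gx).sum())


def edge_aware_loss(pred, target, w_grad=0.1, w_tv=0.01):
    """MSE + gradient matching (keeps edges) + TV penalty (suppresses noise).

    The weights are illustrative assumptions, not values from [14].
    """
    mse = np.mean((pred - target) ** 2)
    py, px = image_gradients(pred)
    ty, tx = image_gradients(target)
    grad_term = np.mean((py - ty) ** 2) + np.mean((px - tx) ** 2)
    tv_term = total_variation(pred) / pred.size
    return float(mse + w_grad * grad_term + w_tv * tv_term)
```

The gradient term penalizes blurred edges that plain MSE tolerates, while the TV term pulls in the opposite direction by discouraging residual noise, so the two weights trade sharpness against smoothness.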

Finally, to validate the models, studies used either external datasets or cross-validation on the training dataset.
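When cross-validation is used with slice- or patch-based training, splitting by patient rather than by sample keeps slices from the same patient out of both training and test folds. The sketch below is a hypothetical patient-level k-fold split using only the standard library; the reviewed studies' actual splitting procedures are not described here.

```python
import random


def patient_kfold(patient_ids, k=5, seed=0):
    """Split unique patient IDs into k folds.

    Returns a list of (train_ids, test_ids) pairs. All samples of a patient
    share its ID, so they always fall on the same side of a split.
    """
    ids = sorted(set(patient_ids))
    if k < 2 or k > len(ids):
        raise ValueError("k must be between 2 and the number of patients")
    random.Random(seed).shuffle(ids)
    folds = [ids[i::k] for i in range(k)]
    splits = []
    for i, test in enumerate(folds):
        train = [p for j, fold in enumerate(folds) if j != i for p in fold]
        splits.append((train, test))
    return splits
```

Splitting at the sample level instead would let adjacent, highly correlated slices of one patient appear in both training and test sets, which tends to inflate the reported metrics.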

Evaluation metrics

Two approaches were used to assess PET image synthesis: quantitative evaluation of image quality and qualitative evaluation of the FD images predicted from LD images. Various metrics were used to measure image-wise similarity, structural similarity, pixel-wise variability, noise, contrast, colorfulness, and signal-to-noise ratio between the estimated PET images and the corresponding FD PET images. Peak signal-to-noise ratio (PSNR) was the most popular metric for quantitative image evaluation. Other metrics included the normalized root mean square error (NRMSE), structural similarity index measure (SSIM), normalized mean square error (NMSE), root mean square error (RMSE), frequency-based blurring measurement (FBM), edge-based blurring measurement (EBM), contrast recovery coefficient (CRC), contrast-to-noise ratio (CNR), and signal-to-noise ratio (SNR). In addition, the bias of the mean and maximum standardized uptake values (SUVmean and SUVmax) was computed for clinical semi-quantitative evaluation. Several studies used physician evaluations to clinically assess the PET images generated by different models alongside the corresponding reference FD and LD PET images.
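The most common quantitative metrics can be computed as sketched below. Conventions differ between studies (PSNR may use the reference maximum or range as the peak, NRMSE may be normalized by range, mean, or norm), so the choices here are assumptions. The SSIM function is a simplified single-window version; standard SSIM averages the same statistic over local, typically Gaussian-weighted, windows.

```python
import numpy as np


def psnr(est, ref, data_range=None):
    """Peak signal-to-noise ratio in dB; peak defaults to the reference range."""
    if data_range is None:
        data_range = float(ref.max() - ref.min())
    mse = float(np.mean((est - ref) ** 2))
    if mse == 0.0:
        return float("inf")
    return float(10.0 * np.log10(data_range ** 2 / mse))


def nrmse(est, ref):
    """Root mean square error normalized by the reference's dynamic range."""
    return float(np.sqrt(np.mean((est - ref) ** 2)) / (ref.max() - ref.min()))


def global_ssim(est, ref, data_range=None):
    """Single-window SSIM over the whole image (simplified)."""
    if data_range is None:
        data_range = float(ref.max() - ref.min())
    c1, c2 = (0.01 * data_range) ** 2, (0.03 * data_range) ** 2
    mu_x, mu_y = est.mean(), ref.mean()
    var_x, var_y = est.var(), ref.var()
    cov = np.mean((est - mu_x) * (ref - mu_y))
    return float(((2 * mu_x * mu_y + c1) * (2 * cov + c2))
                 / ((mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2)))


def suv_bias(est_suv, ref_suv, mask):
    """Percent bias of SUVmean and SUVmax inside a region of interest."""
    e, r = est_suv[mask], ref_suv[mask]
    mean_bias = 100.0 * (e.mean() - r.mean()) / r.mean()
    max_bias = 100.0 * (e.max() - r.max()) / r.max()
    return float(mean_bias), float(max_bias)
```

Because PSNR and NRMSE depend on the chosen normalization, values are only directly comparable across studies that state the same convention.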
