Setting

AKUHN is a 289-bed hospital in Nairobi, Kenya. It is a recognized center of radiology excellence for the Horn of Africa, having been the first hospital in the region to receive Joint Commission International Accreditation (JCIA). Its radiology department is home to 14 faculty members (1 Associate Professor, 6 Assistant Professors, and 7 senior instructors) as well as 6 instructors and a part-time physicist. To be designated as a faculty member, a practitioner must have completed radiology residency training, hold board recognition, and have completed fellowship training. The radiology residency at AKUHN is a structured four-year program that enrolls up to 4 trainees every year. The program is intensive, with multiple diagnostic and interventional radiology scans and procedures performed every day. An average of 82,000 radiology examinations are conducted yearly at AKUHN. The radiology department has state-of-the-art imaging equipment, including digital X-ray and fluoroscopy units, a digital mammography system with tomosynthesis, seven ultrasonography units, a 64-slice MDCT, a cone beam CT, 1.5T and 3T MRI scanners, a SPECT/CT and a PET/CT scanner with an on-site cyclotron, and an angiography suite.

Our clinical skills study was conducted in December 2020. It was approved by the Aga Khan University, Nairobi Institutional Ethics Review Committee. All study participants gave written informed consent before participating. The test takers were anonymized and given access to the Lifetrack tool, which tested them on 37 abdominal CT scans over six 2-hour sessions. Sessions were held from 7 to 9 AM East Africa Time (EAT) in the AKUHN Radiology department under the supervision of the residency program director (RN). The subjects had undergone varying lengths of radiology training, reflecting their time in the residency program; this was recorded in a pre-test questionnaire embedded in the first testing session. All data were collected and recorded in Microsoft Excel spreadsheets. After the test was concluded, all 37 cases were reviewed with the trainees by one of the authors (HV) during weekly online teaching sessions to maximize the educational benefit of each case.

Lifetrack

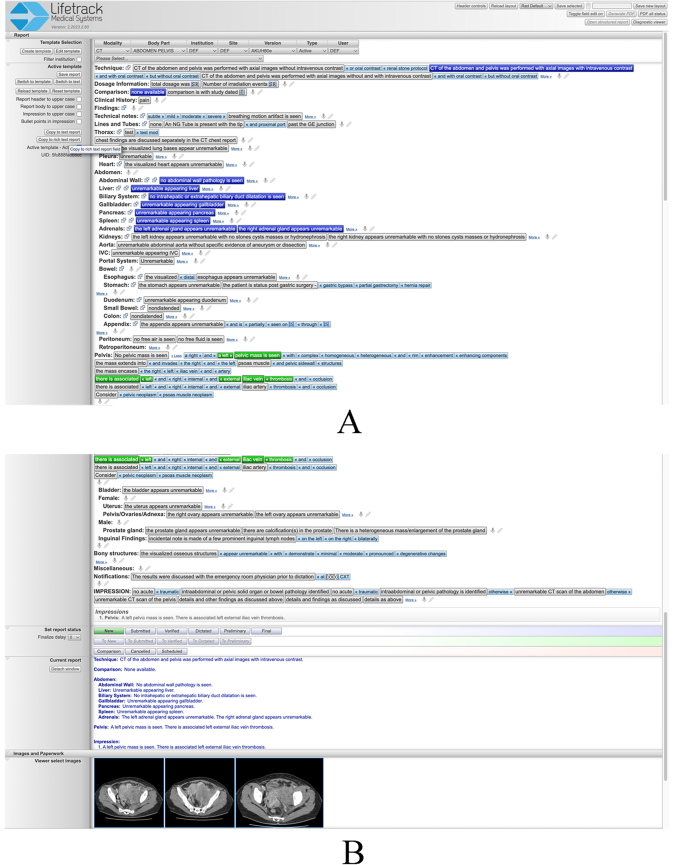

Lifetrack is an FDA-approved, next-generation distributed Radiology Information System (RIS)/PACS architecture produced by Lifetrack Medical Systems (https://www.lifetrackmed.com/). Lifetrack incorporates integrated decision support features and automatic feedback loops that enable efficient, high-quality diagnosis by radiologists. The software is also used for radiologist training and capacity building in countries with a scarcity of radiologists. The Lifetrack PACS has a patented Active Template technology that practicing radiologists use to read scans efficiently (Fig. 1A and B). It can also serve as a training system, because the Active Templates allow each plain-English sentence block in a template to be encoded with data, so that any interpretive choice a radiology trainee makes while building a report can be turned into an automatically scorable “answer” in an Excel spreadsheet. Before the start of our study, a separate two-hour orientation session reproducing the exact testing environment was organized. It allowed study participants to navigate as many example CT scans as necessary to become comfortable with the Lifetrack image viewer and to learn to generate reports by selecting segments of text, as illustrated in Fig. 1A and B.
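To make the idea of an automatically scorable template more concrete, the following Python sketch shows one way selected sentence blocks could carry data tags that are flattened into a spreadsheet-ready row. It is a hypothetical illustration and not Lifetrack's Active Template implementation: the `TemplateBlock` class, its `field`/`value` tags, and the CSV layout are our assumptions.

```python
import csv
import io
from dataclasses import dataclass


@dataclass(frozen=True)
class TemplateBlock:
    """A selectable plain-English sentence block (hypothetical structure).

    `field` names the interpretive question and `value` the answer the
    block encodes; both tags are illustrative assumptions.
    """
    text: str
    field: str
    value: str


def report_to_answers(selected: list[TemplateBlock]) -> dict[str, str]:
    """Collapse the blocks a trainee selected into field -> answer pairs."""
    answers: dict[str, str] = {}
    for block in selected:
        if block.field in answers:
            # Keep every selection for a field rather than overwriting it.
            answers[block.field] += "; " + block.value
        else:
            answers[block.field] = block.value
    return answers


def answers_to_csv_row(trainee_id: str, case_id: str, answers: dict[str, str]) -> str:
    """Serialize one report as a CSV row that spreadsheet software can open."""
    buffer = io.StringIO()
    writer = csv.writer(buffer)
    writer.writerow([trainee_id, case_id] + [f"{k}={v}" for k, v in sorted(answers.items())])
    return buffer.getvalue()
```

For example, selecting the blocks "The appendix is dilated." (tagged `appendix`/`dilated`) and "There is periappendiceal fat stranding." (tagged `appendix`/`fat stranding`) yields `{"appendix": "dilated; fat stranding"}`, which can then be compared field by field against a reference key.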

Fig. 1A & B: These two images capture an entire example report composed using the Lifetrack active report template for an abdomen and pelvis CT examination. The template is selected at the top from a drop-down menu. The selectable elements of text are shown as tabs that can be pressed to build complete sentences, which appear in the current report visible at the bottom of Fig. 1B. If a tab is pressed once (blue highlight), its text is displayed in the body of the report only. If it is pressed twice (green highlight), the text appears in both the body and the impression of the final report, as in this case for the pelvic mass and venous thrombosis findings. An additional feature of the Lifetrack active report template is the ability to insert relevant images into the report, as displayed in Fig. 1B.
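The once/twice pressing behavior described in the caption can be modeled as a three-state toggle. In this minimal Python sketch, the `TabState` names and the assumption that a third press clears the tab are ours; the caption specifies only the effect of the first two presses.

```python
from enum import Enum


class TabState(Enum):
    OFF = 0
    BODY = 1                 # pressed once: blue highlight, body only
    BODY_AND_IMPRESSION = 2  # pressed twice: green highlight, body and impression


def press(state: TabState) -> TabState:
    """Advance a tab by one press; a third press clearing it is an assumption."""
    return TabState((state.value + 1) % 3)


def compose_report(tabs: dict[str, TabState]) -> tuple[str, str]:
    """Return (body, impression) text built from tab states, in tab order."""
    body = [text for text, state in tabs.items() if state is not TabState.OFF]
    impression = [text for text, state in tabs.items()
                  if state is TabState.BODY_AND_IMPRESSION]
    return " ".join(body), " ".join(impression)
```

With this model, a pelvic mass tab pressed twice and a normal liver tab pressed once would place both sentences in the body but only the pelvic mass in the impression, mirroring the example in Fig. 1B.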

Selection of CT scans for the study

The list of pathologies for the examination was selected by the principal investigator (HV) and a junior radiology colleague (TN) from a textbook on emergency diagnostic radiology that encompasses the spectrum of diagnoses for an acute abdomen [9]. To keep the set of CT cases to a manageable number while providing a representative sample of pathologies, only non-trauma abdominal and pelvic diagnoses were selected. CT scans of the abdomen and pelvis for the selected pathologies were identified from the Lifetrack server of clinically documented, anonymized teaching cases. A total of 36 cases of acute abdominal pathology were curated for this CT interpretation skill evaluation, and one normal CT scan of the abdomen and pelvis was added. Formal interpretations of the 37 scans, covering the acute diagnosis and its CT imaging features, any secondary non-acute but concurrent pathology, and any incidental findings, were recorded by the authors (HV, TN) for subsequent scoring of the trainees’ scan interpretations. The cases were organized into 5 sets of 6 cases and one set of 7 cases (containing the normal CT scan), loaded as 6 separate test sessions accessible on the Lifetrack teaching server. All scans showed de novo pathologies, so neither prior scan comparisons nor additional clinical information (beyond acute abdominal pain) were necessary to interpret a scan accurately.
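As a check on the arithmetic (5 × 6 + 7 = 37), the following Python sketch splits the 36 acute cases and the normal scan into six test sessions. The order in which cases were assigned and the placement of the seven-case set as the last session are assumptions, since neither is specified above; `build_sessions` is a hypothetical helper.

```python
def build_sessions(acute_cases: list[str], normal_case: str) -> list[list[str]]:
    """Split 36 acute cases plus one normal scan into five 6-case sets and one 7-case set."""
    if len(acute_cases) != 36:
        raise ValueError("expected 36 acute cases")
    # Six consecutive slices of 6 acute cases each (assignment order assumed).
    sessions = [acute_cases[i:i + 6] for i in range(0, 36, 6)]
    # Assumption: the normal scan joins the last set, making it the 7-case session.
    sessions[-1] = sessions[-1] + [normal_case]
    return sessions
```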

In selecting the cases for the test (Table 1), care was taken to choose examples that were representative of the spectrum of presentations of the chosen pathologies and that had been acquired with good imaging technique. Eleven of the cases included an important second, non-acute diagnosis that trainees were expected to identify, characterize, and report. Incidental findings were wide-ranging, as reported in Table 1.

The 37 scans were presented to the trainees in 6 two-hour sessions on 6 consecutive days. All cases carried the same clinical history of acute abdominal pain, which was provided to the trainees. During each session, the trainees accessed the corresponding Lifetrack test set from their reading workstations in the AKUHN Radiology department and loaded the cases. They reviewed the CT scans with the Lifetrack viewing tools and formulated an opinion on the acute diagnosis depicted on each CT scan as well as any other concurrent pathology that might be present. This process replicated the clinical viewing and interpretation environment of any radiology reading room. Trainees entered their interpretation of each scan by composing a formal radiology report with the patented Lifetrack report generation system, which is based on active templates in which segments of text can be selected to formulate a report (see example in Fig. 1A and B). This reporting system is designed to be exhaustive and can produce any report, including by typing or dictating free text if needed. The final report and its impression record the trainee’s opinion of the primary acute abdominal diagnosis and may include a secondary non-acute diagnosis (if present on the scan) and incidental findings, as in any practicing radiologist’s report. All trainees were aware that their scan interpretations would be graded as part of this study and that, for each scan, they were expected to identify the acute diagnosis, any unrelated secondary diagnosis, and incidental findings, and to avoid overcalling normal findings as pathology.

Each trainee was assigned a unique coded identifier that maintained their anonymity and allowed all of that trainee’s scan interpretations to be pooled across the six testing sessions. Each test CT scan was also labeled with a unique identifier for tracking results and pooling trainee performance by case and pathology. A total of 13 trainees took part in the study, with experience ranging from 12 months to more than 4 years (three of the subjects had recently graduated from the residency training program).
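Pooling by coded identifier amounts to a group-by over (trainee, case, score) records. The following Python sketch is illustrative only: the identifiers and the choice of a mean per trainee and per case are assumptions, not the study's actual data or analysis code.

```python
from collections import defaultdict
from statistics import mean


def pool_scores(records):
    """Pool (trainee_id, case_id, score) records from all sessions.

    Returns (mean score per trainee, mean score per case).
    """
    by_trainee = defaultdict(list)
    by_case = defaultdict(list)
    for trainee_id, case_id, score in records:
        by_trainee[trainee_id].append(score)
        by_case[case_id].append(score)
    per_trainee = {t: mean(s) for t, s in by_trainee.items()}
    per_case = {c: mean(s) for c, s in by_case.items()}
    return per_trainee, per_case
```

Because the trainee identifier is the only key linking sessions, anonymity is preserved while each trainee's scores still aggregate across all six test sets.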

Scores and scoring system

All reports generated by the trainees were reviewed and scored by a senior radiologist specialized in cross-sectional imaging (HV) based on a diagnostic consensus from the co-authors (HV, TN). Every case had four different interpretive components that each trainee was scored on: (a) acute diagnosis, (b) unrelated secondary diagnosis, (c) number of missed incidental findings, and (d) number of overcalls.

The acute/primary diagnosis score ranged from 0 (no credit) to 1 (full credit) based on how well the trainee identified the primary diagnosis, i.e., the main cause of the abdominal pain with which the patient presented. The patient’s urgent management depends on that diagnosis. Partial credit was given for identifying the scan abnormalities associated with this primary diagnosis. If the acute diagnosis had several key imaging features on the CT scan, incremental credit was given for each feature the trainee identified: for example, if the primary diagnosis had 4 imaging features and the trainee identified only 3 of them, they received 0.75 credit for the acute/primary diagnosis. If the primary lesion/abnormality was identified but the correct diagnosis was mentioned only as part of a differential diagnosis list (DDx), additional partial credit was given for the correct diagnosis, inversely proportional to the number of diagnoses listed in the DDx. For example, in the third session (case 16), a trainee identified the splenic lesion and provided a DDx of abscess versus metastasis. That trainee was therefore granted 0.5 credit for identifying the CT abnormality but only 0.5/2 = 0.25 extra credit for mentioning the correct diagnosis as part of a two-item DDx, for a total score of 0.5 + 0.25 = 0.75. In contrast, a trainee who identified the splenic lesion but failed to characterize it (and did not provide a DDx that included the correct diagnosis) received an acute diagnosis score of only 0.5. The acute diagnosis score is significant because it reflects the trainee’s ability and thoroughness in diagnosing acute abdominal disease that they are likely to confront during overnight call duties, and for which a missed or incorrect diagnosis can have deleterious effects on patient care.
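The two partial-credit rules above can be expressed as small functions. This Python sketch assumes that the 0.5 credit for identifying the abnormality in the case 16 example applies generally and that the remaining 0.5 is divided by the number of DDx entries; the function names and the zero score when the lesion is not identified are our assumptions. How the two rules were combined within a single case is not specified, so they are kept separate here.

```python
def feature_credit(features_found: int, features_total: int) -> float:
    """Incremental credit for key imaging features, e.g. 3 of 4 -> 0.75."""
    if features_total <= 0:
        raise ValueError("features_total must be positive")
    if not 0 <= features_found <= features_total:
        raise ValueError("features_found must be between 0 and features_total")
    return features_found / features_total


def ddx_credit(lesion_identified: bool, ddx: list[str], correct: str,
               lesion_credit: float = 0.5) -> float:
    """Credit for an identified lesion plus a share of the rest for a correct DDx.

    The default lesion_credit of 0.5 is taken from the case 16 example.
    """
    if not lesion_identified:
        return 0.0  # assumption: no credit without the lesion itself
    score = lesion_credit
    if correct in ddx:
        # Remaining credit is divided by the length of the DDx list.
        score += (1.0 - lesion_credit) / len(ddx)
    return score
```

Under these assumptions, a two-item DDx containing the correct diagnosis scores 0.5 + 0.5/2 = 0.75, an uncharacterized lesion scores 0.5, and stating the correct diagnosis alone (a one-item list) earns full credit of 1.0.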

The unrelated secondary diagnosis score ranged from 0 to 1 and refers to another important diagnosis that the trainee needs to make from the CT scan but that is not related to the primary diagnosis causing the acute abdominal pain. This unrelated secondary diagnosis would warrant further evaluation on a non-emergent basis. Trainees could receive partial credit depending on the answer provided. The significance of the secondary diagnosis score is that it tests the trainee’s thoroughness in identifying all medically important features of a CT scan rather than stopping once the acute diagnosis is established. It also addresses the trainee’s ability to distinguish medically important but non-urgent pathology from acutely urgent pathology.

Incidental findings are imaging findings on the CT scan that carry no urgent or medically important significance but should nonetheless be identified and reported, because they could be misinterpreted. They reveal the trainee’s visual detection ability and thoroughness, as well as their ability to avoid overdiagnosing benign incidental findings. The incidental findings score ranged from 0 to 1 and was calculated as the fraction of the case’s incidental findings that the trainee identified. A trainee who missed no incidental findings received full credit of 1, and the score was reduced proportionately for each incidental finding missed. For CT cases without incidental findings, no incidental findings score was recorded.
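The incidental findings score can be sketched as the fraction of findings reported, with no score for cases that have none. In this minimal Python version, `incidental_score` is a hypothetical name and returning `None` is our convention for "no score recorded".

```python
from typing import Optional


def incidental_score(n_findings: int, n_missed: int) -> Optional[float]:
    """Fraction of a case's incidental findings that the trainee reported.

    Returns None when the case has no incidental findings (no score recorded).
    """
    if n_findings == 0:
        return None
    if not 0 <= n_missed <= n_findings:
        raise ValueError("n_missed must be between 0 and n_findings")
    return (n_findings - n_missed) / n_findings
```

For instance, missing 1 of 4 incidental findings gives 0.75, and missing none gives full credit of 1.0.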

The number of overcalls could range from 0 to more than 1, depending on how many overcalls the trainee made. An overcall is a wrong diagnosis or a wrong interpretation of a scan finding. Trainees may also misinterpret normal findings or normal variants as pathology, leading to a wrong clinical recommendation and to wrong treatment. This component was scored inversely, so that the trainee received full credit for making no overcalls, and the cumulative score (see below) was reduced for every overcall made. The significance of this measure is that overcalls can be a sign of “interpretive insecurity” or of incomplete or poor training: a trainee who is unsure may provide multiple diagnoses for a scan in an effort to catch the true diagnosis in a broad list of possibilities.

3-score aggregate and cumulative score

We developed a 3-score aggregate defined as the weighted sum of three scores: the acute diagnosis score, the unrelated secondary diagnosis score, and the score for incidental findings, with weights of 80%, 10%, and 10%, respectively. The 3-score aggregate could therefore range from 0 to 100. Because the acute diagnosis is the key interpretive finding that we expect an on-call trainee to make promptly in order to guide patient management accurately, we gave the acute diagnosis score an 80% weight. The unrelated secondary diagnosis and incidental findings scores were given 10% weight each, since these findings are still relevant in evaluating a trainee’s skills but are less significant in the acute care setting. In cases with no secondary diagnosis or no incidental findings, the corresponding 10% weight was added to the acute diagnosis score so that the maximum possible score remained 100.

$$\begin{aligned}
\text{3-score aggregate} &= 80 \times \text{Acute diagnosis score} \\
&\quad + 10 \times \text{Unrelated secondary diagnosis score} \\
&\quad + 10 \times \text{Score for incidental findings}
\end{aligned}$$
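The weighting, including the reassignment of an absent component's 10% to the acute diagnosis, can be sketched as follows. In this Python version, `None` marks a component that does not exist for the case (our convention), and the function name is hypothetical.

```python
from typing import Optional


def three_score_aggregate(acute: float,
                          secondary: Optional[float],
                          incidental: Optional[float]) -> float:
    """Weighted 0-100 aggregate; None marks a component absent from the case."""
    acute_weight = 80.0
    total = 0.0
    if secondary is None:
        acute_weight += 10.0  # weight reassigned to the acute diagnosis
    else:
        total += 10.0 * secondary
    if incidental is None:
        acute_weight += 10.0  # weight reassigned to the acute diagnosis
    else:
        total += 10.0 * incidental
    return total + acute_weight * acute
```

For a case with no secondary diagnosis, an acute score of 0.5 and an incidental score of 1.0 give 90 × 0.5 + 10 × 1.0 = 55, and a perfect interpretation always scores 100 regardless of which components are present.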

We also developed a cumulative score that accounts for all 4 components of the interpretation of an acute abdominal CT scan: acute diagnosis, unrelated secondary diagnosis, incidental findings, and overcalls. The cumulative score is defined as the 3-score aggregate (0–100) minus 10 for every overcall made by the trainee in the interpretation of the case. The cumulative score of a trainee on a case could therefore range from a maximum of 100 down to negative values if many overcalls were made.

$$\text{Cumulative score} = \text{3-score aggregate} - 10 \times \text{Number of overcalls}$$
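The cumulative score is a direct subtraction; the short Python sketch below (with a hypothetical function name) takes a precomputed 3-score aggregate and shows how enough overcalls push the score below zero.

```python
def cumulative_score(three_score_aggregate: float, n_overcalls: int) -> float:
    """3-score aggregate (0-100) minus 10 points per overcall; may be negative."""
    if n_overcalls < 0:
        raise ValueError("n_overcalls cannot be negative")
    return three_score_aggregate - 10.0 * n_overcalls
```

For example, an aggregate of 70 with 2 overcalls gives 50, while an aggregate of 20 with 3 overcalls gives -10.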

Data analysis

We used descriptive statistics to summarize continuous variables as medians with interquartile ranges (IQRs) and as means ± standard deviations (SDs). We analyzed the data using R (R Foundation for Statistical Computing, Vienna, Austria) and produced plots and heatmaps with the R package ggplot2. We fitted linear regression models to analyze the association of the acute diagnosis and cumulative scores with length of radiology training. We considered p < 0.05 statistically significant for all analyses. We divided length of training into five categories (≤ 12 months, 12–24 months, 24–36 months, 36–48 months, and > 48 months) and into two categories (< 24 months and ≥ 24 months), and compared the cumulative scores, 3-score aggregates, and acute diagnosis scores of trainees across these categories. Our hypothesis was that scores would increase with length of training. Similarly, we fitted a model of the association between the number of overcalls and the trainees’ length of training, hypothesizing that the number of overcalls would decrease with increased training. We also examined the distribution of the 3-score aggregate against the number of overcalls made by trainees, assuming that the scores should be inversely proportional to the number of overcalls.
