Experimental environment and parameter settings

In this study, all experiments were conducted on a server equipped with an Intel® Xeon® Gold 6230R CPU @ 2.10 GHz, 100 GB of memory, and a Tesla V100S-PCIE graphics card. All detection models involved in this study were developed on the same Linux platform, using Python 3.8.16, torch 1.10.1, and CUDA 11.1. The parameter settings are presented in Table 1 and were kept constant throughout the experiments.

Evaluation metrics

To evaluate the performance of the proposed MRD-YOLO model for melon ripeness detection, we adopt six evaluation metrics: P (Precision), R (Recall), FLOPs (floating-point operations), FPS (frames per second), mAP (mean Average Precision), and the number of parameters. Precision, recall, and mAP are computed using the following equations:

$$\text{R} = \frac{\text{True Positive}}{\text{True Positive} + \text{False Negative}} \tag{1}$$

$$\text{P} = \frac{\text{True Positive}}{\text{True Positive} + \text{False Positive}} \tag{2}$$

$$\text{mAP} = \frac{1}{|C|} \sum_{c \in C} \text{AP}(c) \tag{3}$$

Precision measures the accuracy of the positive predictions made by a model, while recall measures the ability of a model to identify all relevant instances. In Eq. 3, C represents the set of object classes and AP stands for average precision.

These three metrics collectively offer insights into the trade-offs between the correctness and completeness of our proposed model’s predictions. A good model typically achieves a balance between precision and recall, leading to a high mAP score, indicating accurate and comprehensive performance.
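As a minimal sketch of Eqs. 1 to 3, the three quantities can be computed from raw counts and per-class AP values as follows. The function names, the zero-division handling, and the example numbers are illustrative assumptions and are not taken from our evaluation pipeline; computing AP itself is outside the scope of this sketch.

```python
from typing import Dict


def precision(tp: int, fp: int) -> float:
    """Eq. 2: fraction of predicted positives that are correct."""
    # Returning 0.0 when there are no predictions is a convention assumed here.
    return tp / (tp + fp) if (tp + fp) > 0 else 0.0


def recall(tp: int, fn: int) -> float:
    """Eq. 1: fraction of ground-truth positives that are detected."""
    return tp / (tp + fn) if (tp + fn) > 0 else 0.0


def mean_average_precision(ap_per_class: Dict[str, float]) -> float:
    """Eq. 3: mean of the per-class AP values over the class set C."""
    if not ap_per_class:
        raise ValueError("at least one class is required")
    return sum(ap_per_class.values()) / len(ap_per_class)


# Hypothetical counts and AP values, chosen only for illustration.
p = precision(tp=90, fp=10)
r = recall(tp=90, fn=30)
m = mean_average_precision({"ripe": 0.5, "unripe": 1.0})
print(p, r, m)
```

In this toy case the model is more precise than complete: few of its predictions are wrong, but a quarter of the true instances are missed.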

Beyond these metrics, FPS, FLOPs, and the number of parameters are essential for assessing the computational efficiency and complexity of a model. FPS measures how many frames or images a model can process in one second. The following equation illustrates how FPS is calculated:

$$\text{FPS} = \frac{1}{\text{time per frame}} \tag{4}$$
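One way to obtain the time per frame in Eq. 4 is to time a batch of inferences and divide by the number of frames. The sketch below assumes a generic `infer` callable and a warm-up phase; both are hypothetical stand-ins and not the benchmarking code used in this study. On a GPU, the device would additionally have to be synchronized before each clock reading (for example with `torch.cuda.synchronize()`), since kernels are launched asynchronously.

```python
import time
from typing import Callable, Sequence


def measure_fps(infer: Callable[[object], object],
                frames: Sequence[object],
                warmup: int = 1) -> float:
    """Return FPS = 1 / (average time per frame), following Eq. 4."""
    if not frames:
        raise ValueError("at least one frame is required")
    # Warm-up runs are excluded from timing (an assumption of this sketch).
    for frame in frames[:warmup]:
        infer(frame)
    start = time.perf_counter()
    for frame in frames:
        infer(frame)
    elapsed = time.perf_counter() - start
    time_per_frame = elapsed / len(frames)
    return 1.0 / time_per_frame


def dummy_infer(frame: object) -> object:
    """Hypothetical stand-in for a detector: takes at least 5 ms per frame."""
    time.sleep(0.005)
    return frame


fps = measure_fps(dummy_infer, frames=[0, 1, 2, 3])
print(f"{fps:.1f} FPS")
```

Because the stand-in sleeps for at least 5 ms per frame, the reported value cannot exceed 200 FPS; a real detector would replace `dummy_infer`.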

FLOPs represent the number of floating-point operations required by a model during inference. They are calculated from the operations performed in each layer of the network. The number of parameters refers to the total count of weights and biases used by the model during training and inference, obtained by summing the parameters of each layer.
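For a single convolutional layer, the per-layer counts described above can be sketched as follows. The helper names and the example layer are hypothetical, and the convention of counting one multiply-accumulate as two floating-point operations is an assumption; some profiling tools report multiply-accumulates directly, so absolute numbers may differ by a factor of two from those of a given tool.

```python
def conv2d_params(c_in: int, c_out: int, k: int,
                  bias: bool = True, groups: int = 1) -> int:
    """Weights plus optional biases of a k x k convolution."""
    weights = (c_in // groups) * c_out * k * k
    return weights + (c_out if bias else 0)


def conv2d_flops(c_in: int, c_out: int, k: int,
                 h_out: int, w_out: int, groups: int = 1) -> int:
    """Approximate FLOPs, counting each multiply-accumulate as 2 operations.

    Bias additions are ignored in this sketch.
    """
    macs = (c_in // groups) * k * k * c_out * h_out * w_out
    return 2 * macs


# Hypothetical layer: 3 -> 16 channels, 3 x 3 kernel, 320 x 320 output map.
print(conv2d_params(3, 16, 3))            # 448
print(conv2d_flops(3, 16, 3, 320, 320))   # 88473600

# A depthwise convolution (groups == channels) needs far fewer weights.
print(conv2d_params(16, 16, 3, bias=False, groups=16))  # 144
```

Summing such per-layer values over the whole network gives the model-level totals; the depthwise case illustrates why lightweight designs favor grouped convolutions.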

By focusing on these three metrics, we can effectively assess whether a model is lightweight. Models with lower FLOPs and fewer parameters are generally considered lightweight and are better suited for deployment in resource-constrained environments or for applications requiring efficient processing.

In summary, these six detection metrics provide a comprehensive framework for evaluating the performance and efficiency of our lightweight melon ripeness detection model, ensuring that it meets the stringent requirements of accuracy, speed, and resource efficiency demanded by complex agricultural environments.

Ablation experiments

Ablation experiments of the baseline and the proposed improvements

To assess the efficacy of MobileNetV3, Slim-neck, and Coordinate Attention in enhancing the effectiveness of MRD-YOLO, we integrated these proposed improvements into the YOLOv8n model for individual ablation experiments. Precision, recall, FLOPs, FPS (GPU), mean Average Precision (mAP), and the number of parameters were the metrics employed in these ablation experiments, conducted within a GPU environment with consistent hardware settings and parameter configurations. Additionally, we evaluated the model’s inference speed within a CPU environment, with the experiment results detailed in Table 2.

MobileNetV3 aims to provide advanced computational efficiency while achieving high accuracy, as demonstrated by its excellent performance in our ablation experiments. Substituting the backbone network in the baseline model with MobileNetV3 resulted in a reduction in FLOPs from 8.2 G to 5.6 G, accompanied by a 25% decrease in the number of parameters. MobileNetV3 plays a crucial role in improving the efficiency of YOLOv8n, reducing both the number of parameters and the computational cost. This is achieved while maintaining precision, recall, and mAP across various configurations, such as those with Slim-neck and Coordinate Attention.

Slim-neck, a design paradigm targeting lightweight architectures, proves to be equally indispensable in our melon ripeness detection model. In our study, we implemented VoV-GSCSP, a one-shot aggregation module, to replace the C2f structure of the neck segment in YOLOv8n. Additionally, we integrated GSConv, a novel lightweight convolution method, to substitute the standard convolution operation within the neck. These enhancements collectively yielded an improvement in both accuracy and computational efficiency. However, the adoption of Slim-neck also resulted in a 16% decrease in FPS on the GPU compared to the baseline.

Coordinate Attention, a novel lightweight attention mechanism designed for mobile networks, was introduced before various sizes of detection heads to enhance the representations of objects of interest. Integrating the CA attention mechanism into the baseline YOLOv8n yielded a 0.05% increase in mAP with minimal parameter growth and computational overhead.

By incorporating the three enhancements of MobileNetV3, Slim-neck, and Coordinate Attention, our proposed MRD-YOLO model achieves a mAP of 97.4%, requiring only 4.8 G FLOPs and 2.06 M parameters. This model comprehensively outperforms the baseline YOLOv8n in terms of accuracy and computational efficiency.
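The FLOPs figures quoted in this subsection can be expressed as relative reductions with respect to the 8.2 G of the baseline. The short sketch below uses only the three FLOPs values stated in the text; the helper name is illustrative.

```python
def relative_reduction(baseline: float, improved: float) -> float:
    """Percentage by which `improved` is lower than `baseline`."""
    return (baseline - improved) / baseline * 100.0


baseline_gflops = 8.2      # YOLOv8n baseline
mobilenet_gflops = 5.6     # baseline with the MobileNetV3 backbone
mrd_yolo_gflops = 4.8      # full MRD-YOLO

backbone_only = relative_reduction(baseline_gflops, mobilenet_gflops)
full_model = relative_reduction(baseline_gflops, mrd_yolo_gflops)
print(f"MobileNetV3 backbone: {backbone_only:.1f}% fewer FLOPs")  # 31.7%
print(f"MRD-YOLO:             {full_model:.1f}% fewer FLOPs")     # 41.5%
```

In other words, replacing the backbone alone removes roughly a third of the baseline computation, and the full model removes about two fifths.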

While the MRD-YOLO model maintains real-time detection performance, it exhibits a certain degree of FPS loss on the GPU. However, not all devices are equipped with powerful GPUs, making it equally important to assess our model’s inference speed on a CPU. With the integration of MobileNetV3, the FPS of the baseline YOLOv8n model increased by 24.3%, and the inference speed gap between MRD-YOLO and the baseline vanished in the CPU environment.

Overall, the lightweight and efficient design of MRD-YOLO makes it the preferred choice for melon ripeness detection, particularly for applications requiring deployment on mobile and embedded devices with limited computational resources.

Ablation experiments of the MRD-YOLO and coordinate attention

We then conducted a more specific ablation experiment to analyze the impact of Coordinate Attention on the effectiveness of the MRD-YOLO. We integrated the Coordinate Attention before the different sizes of detection heads to assess its influence on our proposed method, with the experiment results depicted in Table 3. The inclusion of Coordinate Attention has a negligible effect on the number of parameters and the computational load. This aligns perfectly with our requirement for a lightweight design for our models. Additionally, the highest mAP of 97.4% was achieved by adding the CA mechanism before all different sizes of detection heads. These findings underscore the efficacy of Coordinate Attention in emphasizing melon features.

Comparison experiments

To thoroughly validate the performance of the proposed MRD-YOLO for melon ripeness detection, we compared MRD-YOLO with various lightweight backbones and attention mechanisms, as well as six state-of-the-art detection methods.

Comparison experiments of the lightweight backbones

In this section, we assess the efficacy of various lightweight backbones in comparison to MobileNetV3 for detecting melon ripeness. These backbones include MobileNetV2 [26], ShuffleNetV2 [27], and VanillaNet with distinct layer configurations [28]. Subsequently, we integrate these backbone networks into YOLOv8 and present the comparative outcomes in Table 4.

ShuffleNetV2 is a convolutional neural network architecture designed for efficient inference on mobile and embedded devices. It demonstrates outstanding inference speed in GPU environments while maintaining a low number of parameters and computational load. However, its performance in terms of accuracy is not as strong.

VanillaNet, characterized by its avoidance of high depth, shortcuts, and intricate operations such as self-attention, presents a refreshingly concise yet remarkably powerful approach. VanillaNet with different configurations demonstrates robust performance in terms of efficiency. For instance, VanillaNet-6 achieves an FPS of over 100 in a GPU environment with only 5.9 G FLOPs and 2.11 M parameters, albeit with a slightly lower mAP of 96.6%. Conversely, VanillaNet-12 attains a higher mAP of 96.9%. However, it does not match the computational efficiency of VanillaNet-6, and its model complexity is higher. Furthermore, in a CPU environment, the FPS of VanillaNet-12 is merely 65% of that of MobileNetV3.

MobileNetV3 emerges as the top performer among the MobileNet series networks. Compared to its predecessors, MobileNetV3 excels in maintaining detection accuracy while enhancing inference speed. The integration of squeeze-and-excitation blocks and efficient inverted residuals further enhances its efficiency and accuracy, rendering it particularly well suited for resource-constrained environments and therefore for melon ripeness detection.

Comparison experiments of the attention mechanisms

Attention mechanisms serve as a powerful tool for enhancing the performance, interpretability, and efficiency of deep learning models across various tasks and domains. In this section, we conducted comparative experiments on eight attention mechanisms to ascertain their efficacy in enhancing the performance of our melon ripeness detection task. These mechanisms include SE attention (Squeeze-and-Excitation Networks) [29], CBAM attention (Convolutional Block Attention Module) [30], GAM attention (Global Attention Mechanism) [31], Polarized Self-Attention [32], NAM attention (Normalization-based Attention Module) [33], Shuffle attention [34], SimAM attention (Simple, Parameter-Free Attention Module) [35], and CA attention (Coordinate Attention) [22].

As shown in Table 5, with the exception of GAM attention and Polarized Self-Attention, the attention mechanisms lead to negligible increases in the model’s parameter count and computational requirements. When considering the trade-off between model speed and detection accuracy, neither SE attention nor NAM attention demonstrates satisfactory performance. SE attention suffers from a low mAP score and an imbalance between precision and recall. Similarly, NAM attention fails to achieve higher detection precision despite its high FPS. Notably, Shuffle attention, SimAM attention, CBAM attention, and CA attention demonstrate comparable performance improvements, yet CA attention exhibits a slight advantage across all metrics.

Coordinate Attention, which operates by attending to different regions of the input based on their spatial coordinates, explicitly encodes positional information into the attention mechanism. This enables the model to focus on specific regions of interest based on their coordinates in the input space. CA attention achieves the highest mAP value of 97.3% and effectively balances inference speed with detection accuracy. The findings of the aforementioned comparison experiments underscore the significance of CA attention, establishing it as an integral component of the melon ripeness detection task.
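To illustrate how positional information can enter an attention map, the sketch below applies a heavily simplified, single-channel variant of the idea: the feature map is average-pooled separately along each spatial direction, and the two resulting vectors are turned into row-wise and column-wise gates. This is an illustrative assumption rather than the module used in MRD-YOLO; the actual Coordinate Attention block additionally passes the pooled features through learned 1 × 1 convolutions, which are omitted here, and all names are hypothetical.

```python
import math
from typing import List, Tuple

Matrix = List[List[float]]


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def directional_pools(feature: Matrix) -> Tuple[List[float], List[float]]:
    """Average-pool along the width (one value per row) and along the
    height (one value per column), keeping position along the other axis."""
    h, w = len(feature), len(feature[0])
    row_means = [sum(row) / w for row in feature]
    col_means = [sum(feature[i][j] for i in range(h)) / h for j in range(w)]
    return row_means, col_means


def simplified_coordinate_attention(feature: Matrix) -> Matrix:
    """Reweight each position by a row gate times a column gate.

    Simplification: the learned transformations between pooling and the
    sigmoid gates are left out, so the gates depend only on the means.
    """
    row_means, col_means = directional_pools(feature)
    a_h = [sigmoid(v) for v in row_means]   # attention along the height
    a_w = [sigmoid(v) for v in col_means]   # attention along the width
    return [[feature[i][j] * a_h[i] * a_w[j] for j in range(len(a_w))]
            for i in range(len(a_h))]


# Toy 3 x 3 map with a single strong response in the centre.
toy = [[0.0, 0.0, 0.0],
       [0.0, 4.0, 0.0],
       [0.0, 0.0, 0.0]]
print(directional_pools(toy))
print(simplified_coordinate_attention(toy))
```

In the toy map, the centre row and centre column receive the largest gates, so the response at their intersection is attenuated the least, which is the sense in which the attention weights carry coordinate information.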

Comparison of performance with state-of-the-art detection methods

To further analyze the effectiveness of MRD-YOLO in the melon ripeness detection task, we conducted a comprehensive performance analysis. This analysis involved comparing our proposed MRD-YOLO model with six state-of-the-art object detection methods: YOLOv3-tiny [36], YOLOv5n [37], YOLOR [38], YOLOv7-tiny [39], YOLOv8n [16], and YOLOv9 [40]. The comparison results are presented in Table 6.

YOLOv3-tiny demonstrates commendable performance in terms of FPS, particularly on GPU. However, it exhibits higher parameter counts and lower accuracy compared to MRD-YOLO. Similarly, while YOLOv5n boasts minimal floating-point operations, its insufficient accuracy fails to meet our requirement for precise detection.

YOLOv7-tiny demonstrates a relatively balanced performance across all metrics. However, when compared to the baseline YOLOv8n, it significantly lags behind in all metrics, except for a slight lead in the mAP value. YOLOv8n exhibits comprehensive and overall strong performance in our melon ripeness detection task, achieving a mAP of 96.8% with a relatively low number of parameters and computational complexity. Moreover, it demonstrates fast inference capability in both GPU and CPU environments. These factors are why we selected it as our baseline model.

Although YOLOv9, RT-DETR and YOLOR achieve high mAP, they come with substantially higher computational requirements, and their inference speeds do not meet the real-time detection needs of our task. The latest model in the YOLO series, YOLOv10, demonstrates commendable performance, comparable to that of YOLOv8.

Our proposed lightweight model, MRD-YOLO, achieves a mAP of 97.4%, which is only slightly lower than that of YOLOv9, while the latter requires far more computational resources. The incorporation of Slim-neck and MobileNetV3 brings the model’s parameter count down to 2.06 M, the lowest among all the compared models. Additionally, MRD-YOLO maintains real-time detection capability in a GPU environment. Furthermore, relative to the other models, MRD-YOLO performs considerably better in a CPU environment with lower computational power than it does in a GPU environment. These results reaffirm the suitability of our proposed model for the melon ripeness detection task, as it successfully balances detection speed and accuracy while also performing admirably in resource-constrained environments.

Comparison of detection results with state-of-the-art detection methods

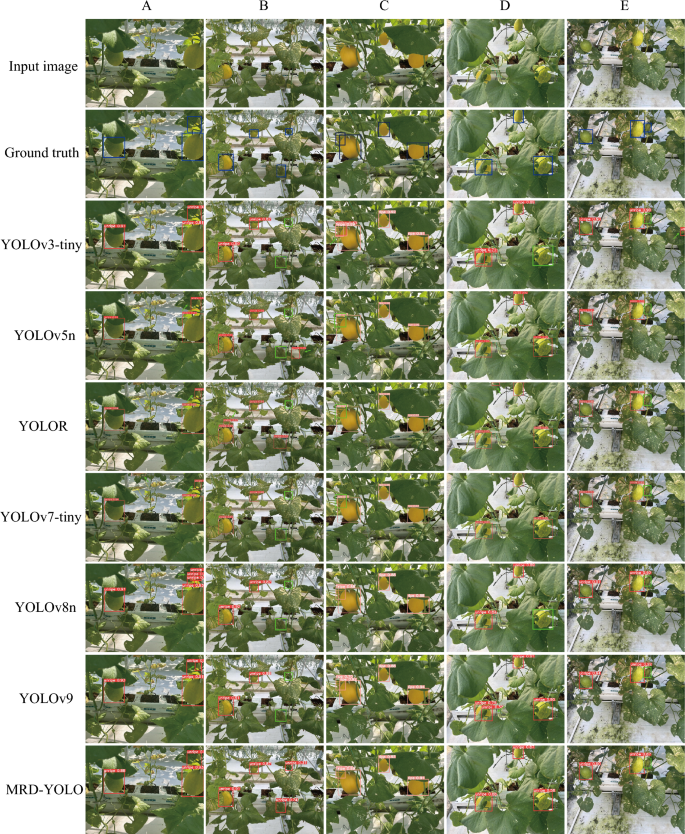

To further demonstrate the effectiveness of the proposed MRD-YOLO, we conducted experiments to compare the actual detection results of seven object detection methods for melon ripeness detection. The input images encompassed varying numbers of melons, distinct ripeness levels, varying degrees of occlusion, and diverse shooting angles and light intensities, aiming to comprehensively assess the detection capabilities of the seven methods.

As illustrated in Fig. 7, a portion of the melon situated in the upper right corner of Fig. 7A and the lower left corner of Fig. 7 is occluded by leaves, a common scenario in real agricultural environments. This occlusion creates a truncated effect, wherein a complete melon appears to be fragmented into multiple parts. Moreover, some melons depicted in Fig. 7B and Fig. 7E exhibit a color resemblance to the leaves and are diminutive in size due to their early growth stage, posing challenges for the model’s detection capabilities. Additionally, the two melons on the left in Fig. 7C exhibit significant overlap. Through an analysis of the detection results produced by the seven detection methods for these intricate images, a comprehensive assessment of each method’s detection capabilities can be made.

In Fig. 7A, only the YOLOv3-tiny and MRD-YOLO detections were accurate. The other models either interpreted melons truncated by leaves as two or more separate melons or were affected by occlusion, resulting in prediction boxes containing only some of the melons. In Fig. 7B, only MRD-YOLO accurately predicted the location and category of four unripe melons, including two targets that were particularly challenging to detect. In Fig. 7C, where the overlap problem occurred, YOLOv3-tiny, YOLOv5n, YOLOR, and YOLOv7-tiny all produced inaccurate detection results. On the other hand, in Fig. 7D, only MRD-YOLO, YOLOv5n and YOLOv7-tiny made correct predictions. A target in Fig. 7E evaded detection by all models due to its extreme color similarity to the leaf and being obscured by shadows.

These findings suggest that the proposed lightweight MRD-YOLO demonstrates high accuracy and robustness, effectively addressing the challenges of melon ripeness detection tasks under complex natural environmental conditions.

Summary of ablation and comparison experiments

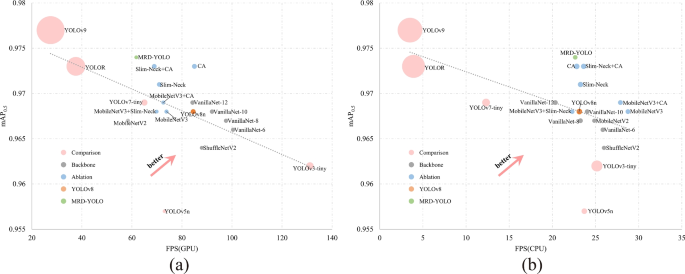

In this section, we provide a comprehensive comparison of 19 different models in the ablation and comparison experiments. In Fig. 8, models favoring the upper right corner indicate superior detection performance. Smaller circle sizes correspond to fewer parameters or computations.

It is evident that all our improvements to the baseline YOLOv8 have yielded promising results, as our improved models, denoted by the distinctive blue circles, occupy the top positions in overall performance. Our improved models exhibit a more pronounced advantage in CPU environments. Although MRD-YOLO does not demonstrate an inference speed advantage over other models in GPU environments, it nonetheless maintains real-time inference speed and achieves high accuracy with minimal computational load. In CPU settings, by contrast, MRD-YOLO exhibits the best detection performance. The models closest to MRD-YOLO lag significantly behind in terms of parameter count and computational complexity.

In conclusion, our proposed lightweight model demonstrates excellent performance in the task of melon ripeness detection, achieving exceptionally high detection accuracy while preserving real-time inference speed and minimizing both parameter count and computational demands. The advantages of our improved MRD-YOLO model are particularly evident in computationally constrained environments. These findings suggest promising application prospects for our model on resource-constrained mobile and edge devices, such as picking robots or smartphones.

Generalization experiment

Generalization in deep learning refers to the ability of a trained model to perform well on unseen data or data on which it hasn’t been explicitly trained. It is a crucial aspect because a model that merely memorizes the training data without learning underlying patterns will not perform well on new, unseen data.

To assess the generalization ability of the MRD-YOLO model, we utilized images from two melon datasets with a total of 132 images obtained from Roboflow as our test set [17, 18]. Since the acquired datasets consist of only one category, melon class, we labeled them accordingly. None of the data in this dataset were involved in the model training process, and the varieties of the melons in these datasets are entirely different from those in our dataset. Despite the relatively poor resolution and quality of the images in the dataset, it presents an excellent opportunity to evaluate the generalization ability of our MRD-YOLO model.

Due to the dataset’s imbalanced categories and the limited number of ripe melons, we present the experimental results for both ripe and unripe categories. The results are illustrated in Table 7. MRD-YOLO achieves a precision of 89.5% in recognizing unripe melons, with a mAP of 88.6%. Additionally, for ripe melons, the mAP reaches 83.1%. These results underscore the MRD-YOLO model’s robust generalization ability and its proficiency in handling unfamiliar datasets. The detection outcomes of the generalization experiments are depicted in Fig. 9. The model exhibits strong detection capabilities for melons with occlusions, overlaps, and scale differences. Furthermore, it accurately distinguishes ripe and unripe melons, even when their colors are similar. This robustness is attributed to the inclusion of numerous images with complex backgrounds in our original training set, enabling the MRD-YOLO to effectively adapt to diverse real-world scenarios.

In conclusion, the results of the generalization experiments reaffirm the effectiveness of MRD-YOLO in detecting melon ripeness in authentic agricultural environments.
